Artificial Intelligence (AI) is a dynamic field, and one of its most promising branches is Generative AI. This subfield, much of it built on the transformer architecture, is dedicated to creating systems that produce entirely new content, from lifelike images to captivating musical compositions and even human-like text. The rapid evolution of Generative AI is reshaping numerous industries, with transformative applications in:

- Drug Discovery: AI can generate new molecule structures with desired properties, accelerating drug development.

- Creative Content Generation: AI can generate scripts, poems, musical pieces, and even realistic images, fostering new avenues for creative expression.

- Machine Translation: Generative AI is revolutionizing machine translation by producing more natural and nuanced translations that capture the essence of the source language.

At the heart of this generative revolution lies a robust architecture called the Transformer.

Traditional Recurrent Neural Networks (RNNs) were the backbone of language processing for many years. However, they struggled to capture long-range dependencies in sequences, which hindered their effectiveness in complex tasks like text generation. RNNs process information one step at a time, which makes it difficult to relate words that are far apart in a sentence.

This challenge led to the development of new models, most notably the Transformer. The transformer architecture addresses these limitations by processing all words in a sequence simultaneously, allowing it to better capture long-range dependencies and perform better on complex language tasks.

Transformers were introduced in 2017 and marked a new era for natural language processing (NLP). This neural network architecture not only overcomes the limitations of RNNs but also offers several other advantages, making it an ideal choice for generative AI tasks.

In the next section, we’ll explore the inner workings of transformers and how they revolutionized the field of generative AI.

Transformer Architecture: A Detailed Look

Traditional Recurrent Neural Networks (RNNs) were the dominant architecture for sequence-based tasks like language processing.

However, they struggled to capture long-range dependencies within sequences, which limited their performance. Transformer architectures emerged to address this, revolutionizing the field of Natural Language Processing (NLP) by modeling these long-range relationships effectively.

The Core Components of a Transformer

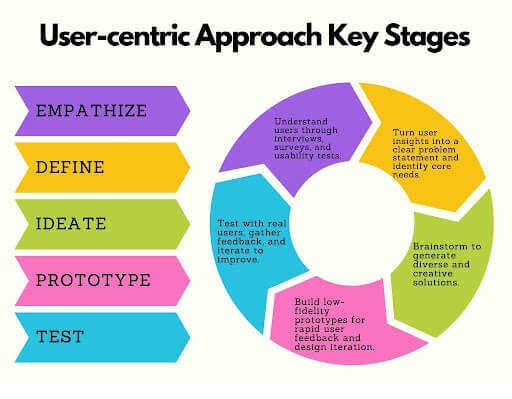

A transformer is built from a few fundamental components, each essential to processing and understanding sequential data. Let’s look at these critical elements:

- Encoder-decoder Structure: Imagine a translator. The encoder part of the transformer “reads” the input sequence (source language) and encodes it into a contextual representation. This representation is then passed to the decoder, which acts like the translator, generating the output sequence (target language) based on the encoded context. This structure allows transformers to handle tasks like machine translation and text summarization, where understanding the entire input sequence is crucial.

- Self-Attention Mechanism: This is the heart of the transformer architecture. Unlike RNNs, which process sequences one step at a time, the self-attention mechanism allows the transformer to attend to all parts of the input sequence simultaneously. Each element in the sequence “attends” to the other elements, assessing their relevance and importance. As a result, the model can capture long-range dependencies between elements that are far apart in the sequence. Vaswani et al. (2017) showed that a transformer built on self-attention significantly outperformed earlier recurrent and convolutional models on machine translation benchmarks.

- Positional Encoding: Because the self-attention mechanism considers all elements simultaneously, it has no built-in notion of word order. Positional encoding addresses this by adding information about each element’s relative or absolute position within the sequence. This allows the model to distinguish between sentences that contain the same words in a different order (e.g., “the dog chased the cat” vs. “the cat chased the dog”).

- Feed-forward Networks: These are standard neural network layers that further process the output of the self-attention mechanism, applied to each position independently. They add non-linearity to the model, allowing it to learn complex relationships within the sequence data.
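To make positional encoding concrete, here is a minimal pure-Python sketch of the fixed sine/cosine scheme used in the original Transformer paper (Vaswani et al., 2017); many later models learn position embeddings instead. The function names are illustrative, and token embeddings are assumed to be plain lists of floats.

```python
import math


def sinusoidal_positional_encoding(seq_len: int, d_model: int) -> list[list[float]]:
    """Return a seq_len x d_model table of sinusoidal position encodings.

    Even dimensions use sine and odd dimensions use cosine; each sine/cosine
    pair shares one frequency, and frequencies decrease across dimensions.
    """
    table = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            pair = i // 2  # dimensions 2k and 2k+1 share the same frequency
            angle = pos / (10000 ** (2 * pair / d_model))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


def add_positions(embeddings: list[list[float]]) -> list[list[float]]:
    """Inject order information by adding the encoding to each token embedding."""
    pe = sinusoidal_positional_encoding(len(embeddings), len(embeddings[0]))
    return [[e + p for e, p in zip(emb, pos)] for emb, pos in zip(embeddings, pe)]


if __name__ == "__main__":
    pe = sinusoidal_positional_encoding(seq_len=4, d_model=6)
    for pos, row in enumerate(pe):
        print(pos, [round(v, 3) for v in row])
```

Because every position gets a distinct pattern, two sentences with the same words in a different order produce different inputs to self-attention.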

The Power of Self-Attention

The self-attention mechanism is the game-changer in transformer architecture. By enabling the model to analyze all parts of the sequence simultaneously and capture long-range dependencies, transformers can effectively understand complex relationships within language.

This capability has driven notable progress across various NLP tasks, from machine translation and text summarization to question answering and sentiment analysis.
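The sketch below illustrates single-head scaled dot-product self-attention in plain Python. It assumes small, hand-supplied projection matrices (`w_q`, `w_k`, `w_v` are hypothetical inputs here; real models learn them and run many attention heads in parallel on accelerators). Note how the score matrix compares every token with every other token in one step, regardless of how far apart they are.

```python
import math

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: list[float]) -> list[float]:
    # Subtract the max before exponentiating for numerical stability.
    top = max(row)
    exps = [math.exp(v - top) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix) -> tuple[Matrix, Matrix]:
    """Single-head scaled dot-product self-attention.

    x holds one row per token. Returns (outputs, attention_weights), where
    attention_weights[i][j] is how much token i attends to token j.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # (n x n): every token vs. every token
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]
    weights = [softmax(row) for row in scaled]  # each row sums to 1
    return matmul(weights, v), weights


if __name__ == "__main__":
    tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    identity = [[1.0, 0.0], [0.0, 1.0]]
    outputs, weights = self_attention(tokens, identity, identity, identity)
    for row in weights:
        print([round(w, 3) for w in row])
```

As a quick sanity check, feeding three identical token vectors with identity projections gives every token an equal weight of 1/3 on every other token.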

Transformer Variants for Generative Tasks

The realm of generative AI thrives on models capable of learning complex patterns from vast amounts of data and then leveraging that knowledge to create entirely new content. Transformer architectures are well suited to this role, but unlocking their full potential requires a technique known as pre-training.

Pre-training: The Secret Sauce of Generative AI

Pre-training involves training a transformer model on a massive dataset of unlabeled text or code. This self-supervised learning process, in which the training signal comes from the data itself rather than from human labels, allows the model to grasp the fundamental building blocks of language, such as word relationships and syntactic structures.

This pre-trained model is a robust foundation for specific generative tasks. Studies by OpenAI have shown that pre-training a transformer model on a dataset of text and code can significantly improve its performance on various downstream tasks compared to models trained from scratch.
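One common pre-training objective, used by GPT-style models, is next-token prediction. The sketch below shows how raw, unlabeled text yields training examples with no human annotation: the target is simply the word that comes next. It assumes whitespace tokenization (real models use subword tokenizers), and `next_token_pairs` is a hypothetical helper name.

```python
def next_token_pairs(tokens: list[str], context_size: int) -> list[tuple[list[str], str]]:
    """Build (context, next_token) training examples from unlabeled text.

    The "label" for each example is the token that follows the context window,
    so the data supervises itself.
    """
    pairs = []
    for end in range(1, len(tokens)):
        start = max(0, end - context_size)  # keep at most context_size tokens
        pairs.append((tokens[start:end], tokens[end]))
    return pairs


if __name__ == "__main__":
    text = "transformers process all tokens in parallel"
    for context, target in next_token_pairs(text.split(), context_size=3):
        print(context, "->", target)
```

During pre-training, the model is asked to predict each target from its context; in a transformer, all of these predictions for one sequence can be computed in a single parallel pass.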

Transformer Variants Leading the Generative AI Charge

The transformer architecture’s flexibility has fostered the development of numerous generative AI models, each with its strengths and applications:

- BERT (Bidirectional Encoder Representations from Transformers): Introduced in 2018 by Google AI, BERT revolutionized natural language processing (NLP). Unlike traditional language models that read text in one direction, BERT uses a masked language modeling approach.

In this method, random words in a sentence are hidden (masked), and the model predicts the hidden words from the context on both sides.

Through bidirectional training, BERT can grasp word relationships comprehensively, making it an effective tool for various downstream applications, including text summarization, sentiment analysis, and question-answering.

A 2019 study by Devlin et al. found that BERT achieved state-of-the-art results on 11 different NLP tasks, showcasing its versatility and effectiveness.

- GPT (Generative Pre-trained Transformer): Developed by OpenAI, GPT is a family of generative pre-trained transformer models. Successive versions, such as GPT-2, GPT-3, and GPT-4, have progressively pushed the boundaries of what’s possible in text generation.

These models are trained on large-scale text and code datasets, enabling them to generate realistic and coherent text in many formats, such as poems, code, scripts, musical pieces, emails, and letters. GPT-3, for instance, has gained significant attention for its ability to generate human-quality text, translate languages, and write creative content.

- T5 (Text-to-Text Transfer Transformer): Introduced by Google AI in 2020, T5 takes a unique approach to NLP tasks. Rather than using task-specific architectures or output layers (e.g., for question answering vs. summarization), T5 treats every task as text-to-text: a single encoder-decoder model reads input text that names the task (for example, with a prefix such as “summarize:”) and produces the answer as output text.

This approach streamlines the training process and allows T5 to tackle a wide range of NLP tasks with impressive performance. A 2020 study by Raffel et al. demonstrated that T5 achieved state-of-the-art results on various NLP benchmarks, highlighting its effectiveness in handling diverse tasks.

The Power and Potential of Transformers in Generative AI

Transformer architectures have reshaped the landscape of generative AI, raising its capabilities to unprecedented levels. Let’s explore the key advantages that have established transformers as the dominant architecture in this domain.

- Exceptional Long Sequence Handling: Unlike recurrent neural networks (RNNs), which struggle with long-range dependencies, transformers excel at processing lengthy sequences. The self-attention mechanism allows transformers to analyze all parts of a sequence simultaneously, capturing complex relationships between words even if they are far apart. This is particularly advantageous for tasks like machine translation, where understanding the context of the entire sentence is crucial for accurate translation. A study by Vaswani et al. (2017) demonstrated that transformers achieved state-of-the-art results in machine translation tasks, significantly outperforming RNN-based models.

- Faster Training Through Parallelism: Because self-attention has no recurrence, a transformer can process all positions of a training sequence in parallel, whereas an RNN must step through the tokens one at a time. This parallelism translates to significantly faster training than RNNs on modern hardware. Vaswani et al. (2017) reported reaching state-of-the-art translation quality at a small fraction of the training cost of the best previous models, making transformers well suited to applications requiring rapid model development.

- Unmatched Versatility for NLP Tasks: The power of transformers extends beyond specific tasks. Their ability to handle long sequences and capture complex relationships makes them adaptable to various natural language processing applications. Transformers are proving their effectiveness across the NLP spectrum, from text summarization and sentiment analysis to question answering and code generation. A 2020 study by Brown et al. showcased the versatility of GPT-3, a powerful transformer-based model, by demonstrating that it can perform many NLP tasks from just a few examples given in the prompt.

The Future of Transformers in Generative AI

The realm of transformer architecture is constantly evolving. Researchers are actively exploring advancements like:

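- Efficient Transformer Architectures: Optimizing transformer models for memory usage and computational efficiency (for example, reducing the cost of standard self-attention, which grows quadratically with sequence length) will enable their deployment on resource-constrained devices.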

- Interpretability of Transformers: Enhancing our understanding of how transformers make decisions will foster greater trust and control in their applications.

- Multimodal Transformers: Integrating transformers with other modalities, such as vision and audio, promises exciting possibilities for tasks like image captioning and video generation.

Case Studies

Transformer architectures have revolutionized the field of generative AI, powering a wide range of groundbreaking applications. Let’s explore some real-world examples:

Case Study 1: Natural Language Processing (NLP)

- Language Translation: Transformer-based models have significantly improved machine translation quality, handling long sentences and complex linguistic structures more effectively than previous approaches. Vaswani et al. (2017) reported a 28.4 BLEU score on the WMT14 English-to-German translation task, surpassing earlier systems including Google’s LSTM-based Neural Machine Translation (GNMT) system [Wu et al., 2016].

- Text Summarization: Transformers have excelled in generating concise and informative summaries of lengthy documents. Models like Facebook’s BART (Bidirectional and Auto-Regressive Transformers) have achieved state-of-the-art results in abstractive summarization tasks.

Case Study 2: Image and Video Generation

- Image Generation: Models like OpenAI’s DALL-E, an autoregressive transformer, and Google’s Imagen, which uses a large transformer language model (T5) to encode text for a diffusion-based image generator, have demonstrated remarkable capabilities in generating highly realistic and creative images from textual descriptions. These models have opened up new possibilities for artistic expression and content creation.

- Video Generation: While still in its early stages, research is exploring the application of transformers to video generation. Models like VideoGPT have shown promise in generating coherent and visually appealing video sequences.

Case Study 3: Other Domains

- Speech Recognition: Transformers have been adapted for speech recognition tasks, achieving competitive performance with models like Meta AI’s Wav2Vec 2.0.

- Drug Discovery: Researchers are exploring using transformers to generate novel molecular structures with desired properties, accelerating drug discovery.

Conclusion

Understanding transformer architecture is fundamental to grasping the advancements in generative AI, from BERT to GPT-4. The transformer architecture, first presented by Vaswani et al. in 2017, substantially changed natural language processing by allowing models to capture context and long-range dependencies more accurately and efficiently than earlier recurrent models. This architecture has since become the backbone of numerous state-of-the-art models.

By exploring transformer architecture, we see how its innovative use of self-attention mechanisms and parallel processing capabilities has drastically improved the performance and scalability of AI models.

BERT’s bidirectional context understanding and GPT-4’s autoregressive text generation are prime examples of how transformers can be tailored for specific tasks, leading to significant breakthroughs in language understanding and generation.
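Autoregressive generation, as used by GPT-style models, means predicting one token, appending it, and repeating. The loop below is a minimal sketch of greedy decoding under a loud assumption: a toy bigram counter stands in for the transformer. A real model conditions on the whole prefix rather than only the last token, and often samples from its predictions instead of always taking the single most likely token. All names here are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram_counts(corpus: list[str]) -> dict[str, Counter]:
    """Count which token follows which: a toy stand-in for a trained model."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        tokens = ["<s>"] + sentence.split() + ["</s>"]  # start/end markers
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def generate_greedy(counts: dict[str, Counter], prompt: str, max_new_tokens: int = 10) -> str:
    """Autoregressive decoding: extend the text one token at a time.

    Each new token is chosen from a prediction conditioned on what has been
    generated so far (here, only the last token, because the stand-in is a bigram model).
    """
    tokens = ["<s>"] + prompt.split()
    for _ in range(max_new_tokens):
        candidates = counts.get(tokens[-1])
        if not candidates:
            break  # the toy model has never seen this token
        next_token = candidates.most_common(1)[0][0]  # greedy: most frequent follower
        if next_token == "</s>":
            break  # the model predicts the end of the text
        tokens.append(next_token)
    return " ".join(tokens[1:])


if __name__ == "__main__":
    model = train_bigram_counts(["the cat sat", "the cat ran", "the cat sat"])
    print(generate_greedy(model, "the"))
```

Swapping the bigram lookup for a transformer’s next-token prediction leaves the loop itself unchanged, which is why generation in these models proceeds token by token even though training is parallel.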

The impact of transformer architecture on generative AI is profound. It enhances the capabilities of AI models and broadens the scope of applications, from chatbots and translation services to advanced research tools and creative content generation. This versatility should excite us about the diverse applications of AI in the future.

In summary, transformer architecture is a cornerstone of modern AI, driving progress in how machines understand and generate human language. Its ongoing evolution, from BERT to GPT-4, underscores its transformative power, giving us hope for continued innovation and deeper integration of AI into our daily lives.

How can [x]cube LABS Help?

[x]cube has been AI-native from the beginning, and we’ve been working through various versions of AI tech for over a decade. For example, we’ve been working with the developer interfaces of BERT and GPT since before the public release of ChatGPT.

One of our initiatives has led to the OCR scan rate improving significantly for a complex extraction project. We’ve also been using Gen AI for projects ranging from object recognition to prediction improvement, as well as chat-based interfaces.

Generative AI Services from [x]cube LABS:

- Neural Search: Revolutionize your search experience with AI-powered neural search models that use deep neural networks and transformers to understand and anticipate user queries, providing precise, context-aware results. Say goodbye to irrelevant results and hello to efficient, intuitive searching.

- Fine-Tuned Domain LLMs: Tailor language models to your specific industry for high-quality text generation, from product descriptions to marketing copy and technical documentation. Our models are also fine-tuned for NLP tasks like sentiment analysis, entity recognition, and language understanding.

- Creative Design: Generate unique logos, graphics, and visual designs with our generative AI services based on specific inputs and preferences.

- Data Augmentation: Enhance your machine learning training data with synthetic samples that closely mirror real data, improving model performance and generalization.

- Natural Language Processing (NLP) Services: Handle tasks such as sentiment analysis, language translation, text summarization, and question-answering systems with our AI-powered NLP services.

- Tutor Frameworks: Launch personalized courses with our plug-and-play Tutor Frameworks that track progress and tailor educational content to each learner’s journey, perfect for organizational learning and development initiatives.

Interested in transforming your business with generative AI? Talk to our experts over a FREE consultation today!

1-800-805-5783
