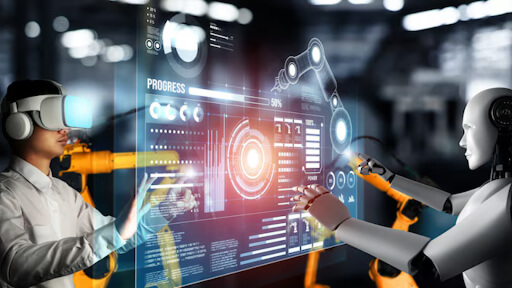

Artificial intelligence isn’t just about chatbots or automated replies anymore. We’re entering the era of intelligent agents: systems that can think, plan, and act on their own. At the heart of this revolution are AI Agent Frameworks. These powerful toolkits are helping businesses automate complex processes, improve customer experiences, and unlock the full potential of large language models (LLMs).

But before you dive in, it’s essential to understand what these frameworks are, how they work, and what they mean for your organization’s future. This guide breaks it down in simple, human terms.

Why AI Agent Frameworks Are Gaining Traction

Let’s start with the big picture. According to Precedence Research, the global market for AI agents was valued at $3.7 billion in 2023 and is projected to surpass $103 billion by 2032, a compound annual growth rate (CAGR) of 44.9%. Something big is happening.
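As a quick sanity check on those figures, and assuming growth compounds over the nine years from 2023 to 2032, the implied annual growth rate works out as follows:

```latex
\mathrm{CAGR} = \left(\frac{V_{2032}}{V_{2023}}\right)^{1/9} - 1
             = \left(\frac{103}{3.7}\right)^{1/9} - 1
             \approx 1.447 - 1 = 0.447 \;(\approx 44.7\%)
```

That is roughly in line with the reported 44.9%; the small gap is most likely due to rounding of the start and end values.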

So why the sudden surge? Businesses are seeking smarter, more adaptive tools: not just software that reacts, but systems that can make decisions and act on goals with minimal human intervention. AI Agent Frameworks make this possible by giving developers a foundation for building intelligent systems quickly and efficiently.

A 2025 survey found that 78% of UK C-suite executives are already using AI agents in some capacity. These aren’t just pilot projects; they’re delivering real value in the form of cost savings, faster workflows, and happier customers.

Breaking It Down: What Is an AI Agent Framework?

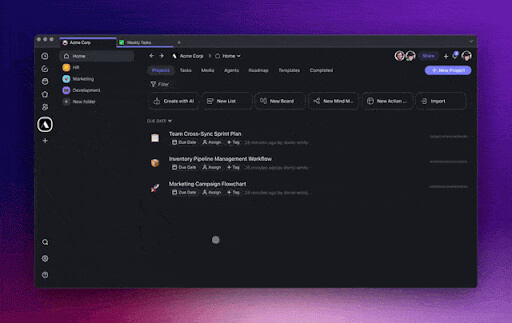

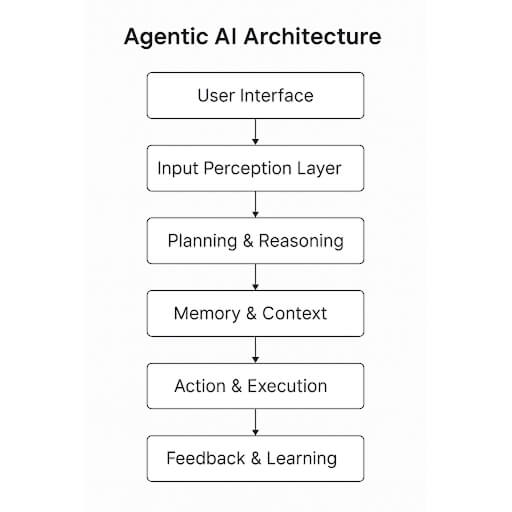

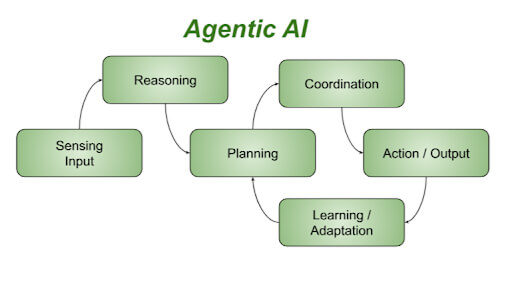

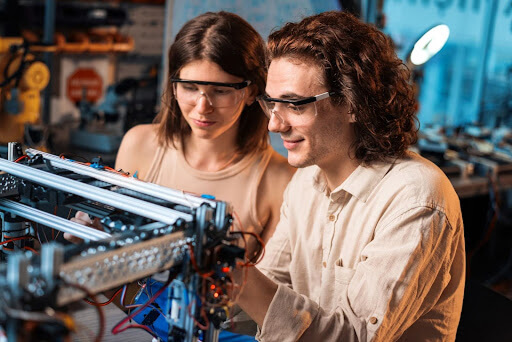

Think of an AI Agent Framework as a toolkit. Just like you’d use a construction kit to build a house, these frameworks provide the materials and blueprints to create digital agents that can:

- Make decisions based on data and rules.

- Interact with APIs, databases, and software tools.

- Remember past actions and adjust strategies.

- Collaborate with other agents or human users.

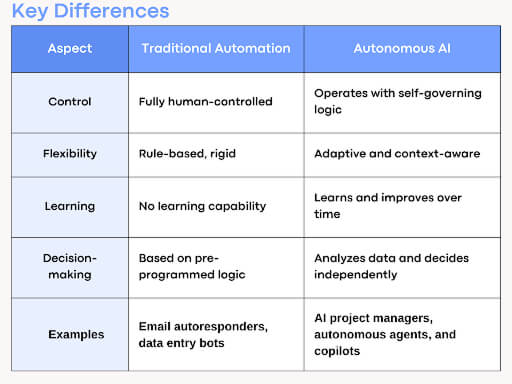

Unlike traditional AI models, which only react to specific inputs, AI agents are dynamic. They can plan, adjust course when needed, and act independently to achieve their goals.

Some key features you’ll find in these frameworks:

- Planning modules to help agents think through tasks

- Interfaces to connect with your tools and data

- Memory systems to track what’s happened before

- Communication tools for team-based agents

- Monitoring dashboards to keep an eye on performance

All of this combines to create agents that aren’t just smart but capable of getting real work done.
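To make these building blocks more concrete, here is a minimal, framework-free Python sketch of how planning, a tool interface, memory, and basic monitoring can fit together. Everything in it is hypothetical: the `Agent` class, the `lookup_order` and `draft_reply` tools, and the rule-based `plan` method, which stands in for the LLM call a real framework would typically make. It is not the API of LangChain, AutoGen, or any other framework.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical tools. In a real deployment these would wrap APIs or databases.
def lookup_order(order_id: str) -> str:
    orders = {"A100": "shipped", "A101": "processing"}  # toy in-memory "database"
    return orders.get(order_id, "not found")

def draft_reply(status: str) -> str:
    return f"Your order is currently: {status}."

@dataclass
class Agent:
    tools: dict[str, Callable[[str], str]]                            # interface to tools
    memory: list[tuple[str, str, str]] = field(default_factory=list)  # (tool, input, output)
    metrics: dict[str, int] = field(default_factory=lambda: {"tool_calls": 0})  # monitoring

    def plan(self, goal: str) -> list[str]:
        # Rule-based stand-in for planning; a real framework would usually ask an LLM.
        if goal == "order status":
            return ["lookup_order", "draft_reply"]
        return []  # no known plan: take no action

    def run(self, goal: str, value: str) -> str:
        current = value
        for step in self.plan(goal):
            output = self.tools[step](current)           # act through the tool interface
            self.memory.append((step, current, output))  # remember what happened
            self.metrics["tool_calls"] += 1              # track activity for monitoring
            current = output                             # feed the result to the next step
        return current

agent = Agent(tools={"lookup_order": lookup_order, "draft_reply": draft_reply})
print(agent.run("order status", "A100"))  # Your order is currently: shipped.
print(agent.metrics)                      # {'tool_calls': 2}
```

Real frameworks add much more (LLM-driven planning, retries, persistent memory stores, multi-agent hand-offs), but many follow a broadly similar plan, act, and record loop.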

A Quick Look at the Best AI Agent Frameworks in 2025

There are numerous AI agent frameworks available, each with its own strengths. Here are a few top frameworks you might want to explore:

- LangChain: Great for chaining tasks and working with LLMs like GPT-4.

- AutoGen: Microsoft’s framework, built for multi-agent conversations and task orchestration.

- Semantic Kernel: A Microsoft-backed tool that plays well with .NET and C#.

- CrewAI: Ideal if you want agents to collaborate and split up work.

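- BabyAGI & ReAct: BabyAGI is a lightweight, task-driven agent project, and ReAct is a prompting pattern that interleaves reasoning with actions; both are good starting points for fast prototyping.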

- Hugging Face + Accelerate: Useful when you want to build agents on custom ML models, with Accelerate helping run that model code across different hardware setups.

- JADE: A robust option for traditional industries like logistics.

- Rasa: Well-suited for conversational agents with strong NLP capabilities.

The best AI agent framework for you depends on your goals, tech stack, and level of AI maturity.

What’s in It for Your Business?

Adopting AI Agent Frameworks can be a game-changer. Here’s how businesses are already benefiting:

- Faster project launches with reusable components

- Up to 35% cost reduction, thanks to automation

- 55% increase in productivity

- Scalability across departments and workflows

- Greater accuracy and fewer human errors

- 24/7 operation, which is a big plus for global teams

It’s not just about saving time and money: AI agents can also improve the quality of the decisions and services you deliver.

Watch Out: Potential Pitfalls to Avoid

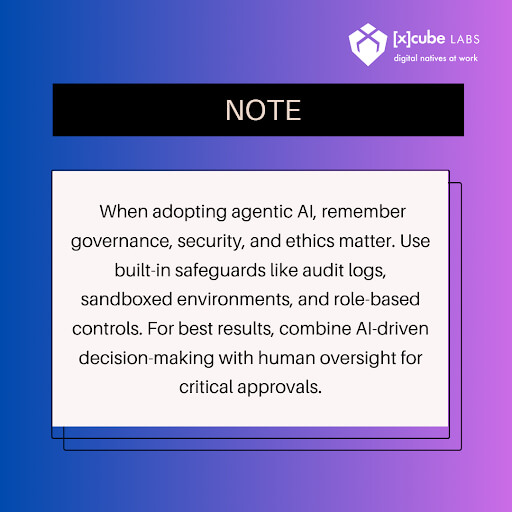

Of course, no technology is without its risks. Here are a few things to keep in mind:

- Security matters: In 2024, 23% of IT professionals reported issues with agents exposing credentials.

- Integration is challenging: A Gartner study revealed that 95% of CIOs found it difficult to connect agents with legacy systems.

- Data privacy is key: Nearly half of AI developers reported that controlling sensitive data remains a significant challenge.

- You’ll need skilled people: These systems aren’t plug-and-play. You’ll need teams who understand both AI and your business.

- Black-box behavior: Without oversight, agents might make unpredictable choices.

How to Approach AI Agent Adoption the Smart Way

If you’re thinking about rolling out AI agents in your organization, here are a few smart steps to take:

- Start small: Pick a single use case, like automating responses in customer service or processing invoices.

- Choose the right framework: Select one that aligns with your existing infrastructure and objectives.

- Run pilot programs: Test the waters before scaling up.

- Set up governance: Track actions, assign permissions, and audit behavior.

- Create a cross-functional team: Don’t leave this to IT alone. Bring in operations, legal, and customer experience personnel as well.

- Train your people: According to Business Insider, 69% of tech leaders plan to grow their teams just to manage AI tools.

- Continue to monitor: Build dashboards to track agent performance and intervene when necessary.
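The governance and monitoring steps above can start very simply. The sketch below assumes a hypothetical permission table (`PERMISSIONS`) and an in-memory audit log (`AUDIT_LOG`); every tool call an agent attempts is checked against the table and recorded, whether or not it is allowed. In production you would likely back these with your identity system and a durable log store rather than Python globals.

```python
import datetime
from typing import Callable

# Hypothetical permission table: which agent may call which tool.
PERMISSIONS: dict[str, set[str]] = {
    "support_agent": {"read_ticket", "send_reply"},
    "finance_agent": {"read_invoice"},
}

# In-memory audit trail; a real system would write to durable storage.
AUDIT_LOG: list[dict] = []

def governed_call(agent: str, tool: str, fn: Callable[[], str]) -> str:
    # Unknown agents get an empty permission set, so access is denied by default.
    allowed = tool in PERMISSIONS.get(agent, set())
    # Record every attempt, including denied ones, before acting.
    AUDIT_LOG.append({
        "time": datetime.datetime.now(datetime.timezone.utc).isoformat(),
        "agent": agent,
        "tool": tool,
        "allowed": allowed,
    })
    if not allowed:
        raise PermissionError(f"{agent} is not permitted to call {tool}")
    return fn()

print(governed_call("support_agent", "send_reply", lambda: "reply sent"))
try:
    governed_call("support_agent", "read_invoice", lambda: "invoice data")
except PermissionError as err:
    print("Blocked:", err)
print(len(AUDIT_LOG))  # 2 entries: one allowed, one denied
```

Logging denied attempts alongside successful ones is what makes the trail useful when you audit agent behavior later.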

What’s Next: A Glimpse Into the Future

The future of AI Agent Frameworks is exciting. We’re not far from agents that can learn from each other, negotiate tasks, and even improve their own code.

Big players like Google, Meta, and OpenAI are already building multi-agent systems: digital teams that can collaborate and reason together. Imagine agents that brainstorm together, correct each other’s mistakes, or work in shifts to keep a business running smoothly.

And it’s not just tech hype. The earlier your business starts learning, the bigger the long-term payoff.

Conclusion

AI Agent Frameworks aren’t just another shiny tool. They represent a whole new way of thinking about automation, intelligence, and business growth, giving you the power to build digital workers that think, act, and adapt independently.

But success isn’t guaranteed. You’ll need a clear plan, cross-team collaboration, and a willingness to learn as you go. The companies that start small, move fast, and build responsibly will be the ones leading their industries in the years to come.

So, if you’re a business leader looking to future-proof your strategy, now’s the time to explore what AI Agent Frameworks can do for you.

FAQs

1. What is an AI Agent Framework in simple terms?

It’s a toolkit that helps you build intelligent software agents capable of making decisions, learning, and interacting with systems, much like a virtual teammate.

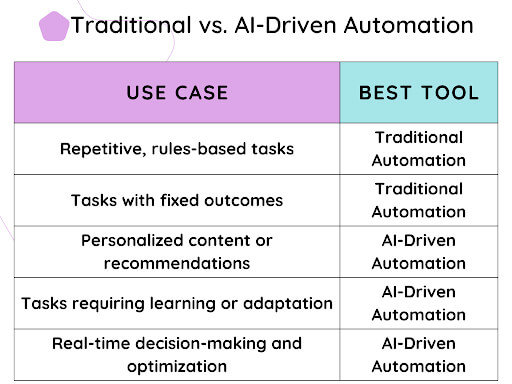

2. How are AI Agent Frameworks different from traditional AI tools?

Traditional AI responds to inputs. AI Agent Frameworks enable agents to plan, work independently, and collaborate with users or systems.

3. Are these frameworks only for tech companies?

Not at all. Any business—from retail to finance—can benefit by automating workflows, improving customer service, or optimizing operations.

4. What should I do before implementing an AI Agent Framework?

Start with a pilot project, choose the right framework for your needs, set up proper governance, and invest in training your team.

How Can [x]cube LABS Help?

At [x]cube LABS, we craft intelligent AI agents that seamlessly integrate with your systems, enhancing efficiency and innovation:

- Intelligent Virtual Assistants: Deploy AI-driven chatbots and voice assistants for 24/7 personalized customer support, streamlining service and reducing call center volume.

- RPA Agents for Process Automation: Automate repetitive tasks like invoicing and compliance checks, minimizing errors and boosting operational efficiency.

- Predictive Analytics & Decision-Making Agents: Utilize machine learning to forecast demand, optimize inventory, and provide real-time strategic insights.

- Supply Chain & Logistics Multi-Agent Systems: Enhance supply chain efficiency by leveraging autonomous agents that manage inventory and dynamically adapt logistics operations.

- Autonomous Cybersecurity Agents: Enhance security by autonomously detecting anomalies, responding to threats, and enforcing policies in real time.

- Generative AI & Content Creation Agents: Accelerate content production with AI-generated descriptions, visuals, and code, ensuring brand consistency and scalability.

Integrate our Agentic AI solutions to automate tasks, derive actionable insights, and deliver superior customer experiences effortlessly within your existing workflows.

For more information and to schedule a FREE demo, check out all our ready-to-deploy agents here.

1-800-805-5783
