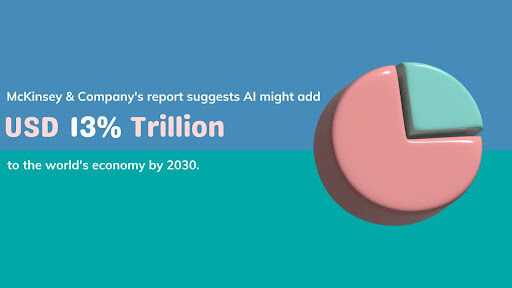

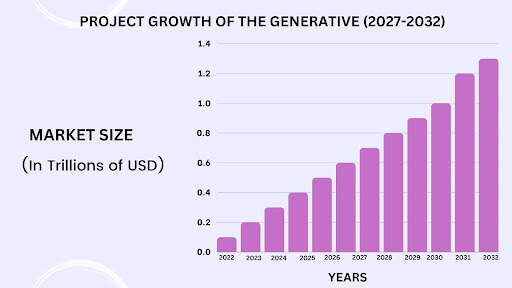

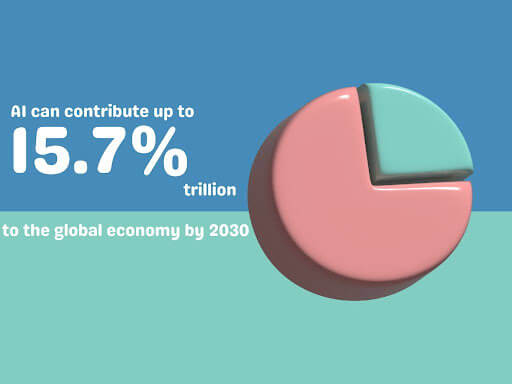

Imagine a world where your bank could process millions of transactions in seconds, spot fraudulent activity before it causes harm, and give you financial advice tailored to you. This is the future of finance, powered by artificial intelligence (AI).

AI in finance is like a highly skilled digital assistant with extensive data analysis capabilities. It can identify hidden patterns and automate routine tasks, and in that role it supports and guides your financial decisions.

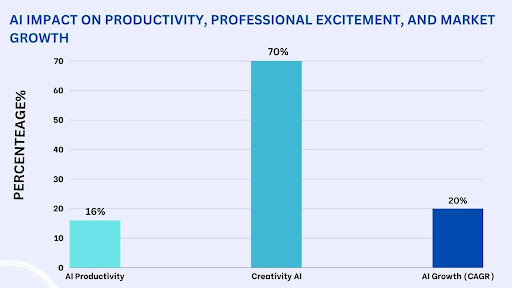

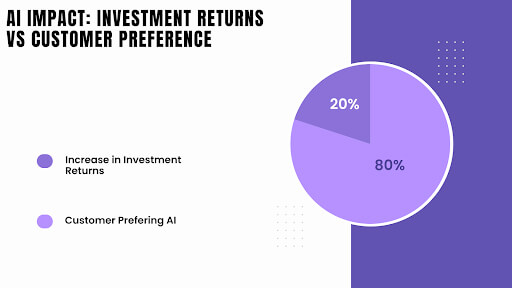

For example, a large investment bank recently used AI to analyze over 100 million data points for potential market anomalies, resulting in a 20% increase in investment returns.

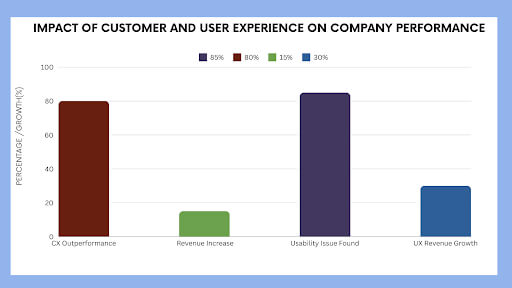

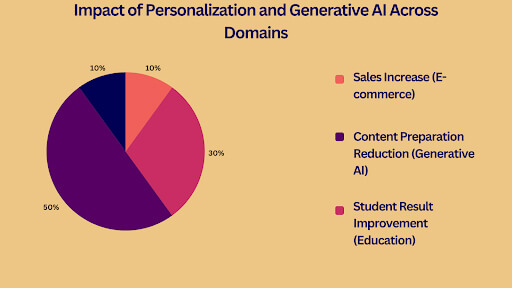

But AI in finance isn’t just about efficiency; it is also about improving your experience. AI-driven chatbots and virtual assistants can offer personalized customer service 24/7, so help is available whenever you need it. A recent survey found that 80% of customers prefer interacting with AI-powered virtual assistants over human representatives.

Benefits of AI in Financial Processes

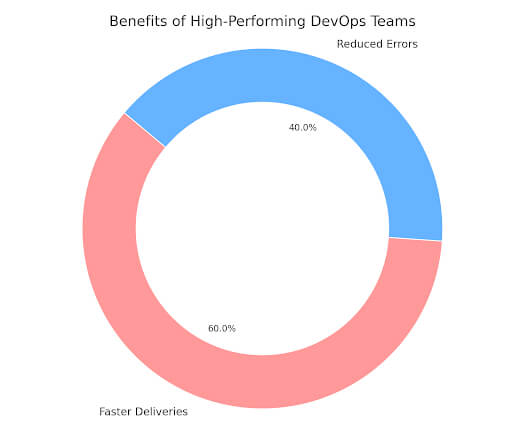

- Enhanced efficiency: Automating repetitive tasks leads to faster turnaround times and reduced operational costs.

- Improved accuracy: AI algorithms can process large volumes of data with few mistakes, reducing the risk of human error.

- Risk mitigation: AI-powered fraud detection systems can identify suspicious activities, safeguarding financial institutions and customers.

- Enhanced customer experience: AI-powered chatbots and virtual assistants can raise customer satisfaction by offering personalized service.

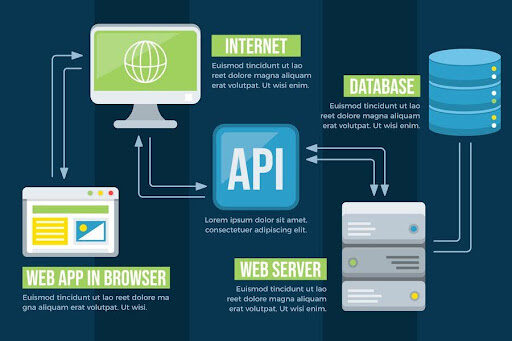

Applications of AI in Financial Processes

Artificial intelligence is transforming the financial sector by offering innovative solutions to long-standing challenges. Advanced algorithms and machine learning techniques help financial institutions enhance efficiency, reduce risks, and improve customer experiences.

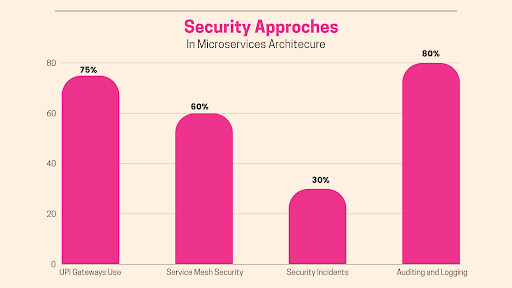

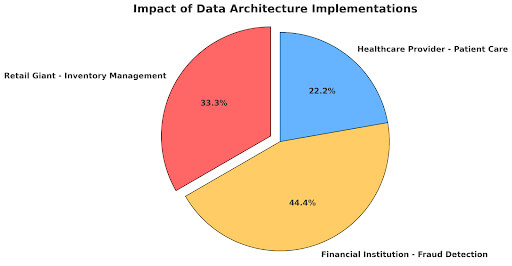

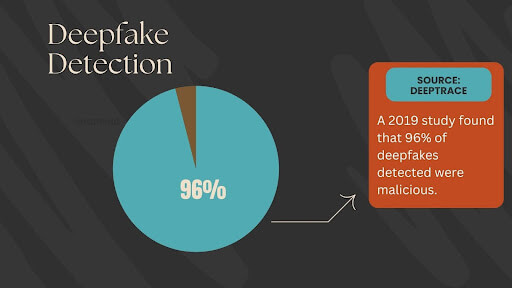

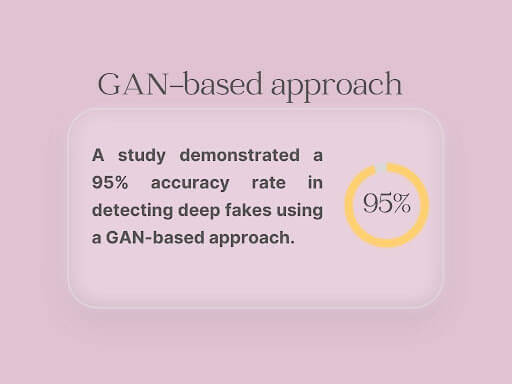

- Fraud detection: Fraud detection is among the most important uses of AI in finance. AI algorithms can analyze large transaction databases to identify patterns and anomalies that could indicate fraud.

For example, a major bank recently implemented an AI-powered fraud detection system that identified and prevented over $1 billion in fraudulent transactions in a year.

- Credit risk assessment: AI is also changing credit risk assessment. By analyzing a borrower’s financial history, social media activity, and other relevant data, AI models can provide more accurate and comprehensive credit risk assessments.

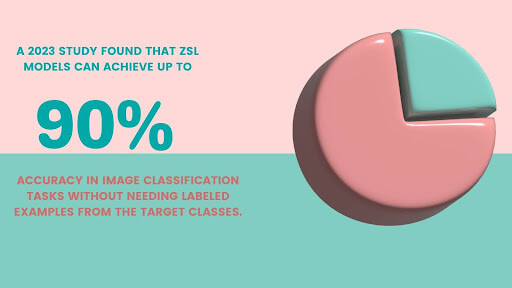

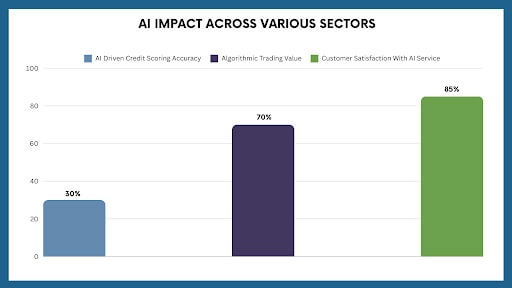

This reduces the likelihood of bad loans and enables lenders to offer more tailored financial products. A recent study by McKinsey found that AI-driven credit scoring models can improve prediction accuracy by up to 30% compared to traditional credit scoring methods.

- Algorithmic trading: AI-powered algorithmic trading is another area where the technology is making a significant impact. These algorithms can evaluate enormous volumes of real-time data, detect trading opportunities, and execute trades as profitably as possible.

A study by the Boston Consulting Group estimated that algorithmic trading accounts for more than 70% of all equity trading volume.

- Customer service: AI also enhances customer experiences in the financial sector through chatbots and virtual assistants.

These AI-driven systems can handle routine customer inquiries, provide personalized recommendations, and even assist with complex tasks. A survey by PwC found that 85% of customers are satisfied with their interactions with AI-powered customer service agents.

- Regulatory compliance: Finally, AI can help financial organizations navigate the challenging world of regulatory compliance. By automating compliance tasks such as reporting, monitoring, and risk assessment, AI can reduce the burden on compliance teams and minimize the risk of non-compliance.

Additionally, AI can help identify potential regulatory breaches early on, allowing institutions to take proactive measures to mitigate risks.
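To make the fraud detection idea above a little more concrete, here is a minimal sketch of anomaly detection on transaction amounts. It is not how any particular bank’s system works; production systems typically combine trained machine learning models with many more signals than the amount alone. The function names, the sample amounts, and the 3.5 threshold are illustrative assumptions. The sketch scores each amount with a modified z-score built from the median and the median absolute deviation (MAD), which is less distorted by extreme values than a mean-based score.

```python
from statistics import median


def robust_z_scores(amounts):
    """Return a modified z-score for each amount, based on median and MAD."""
    med = median(amounts)
    mad = median(abs(a - med) for a in amounts)
    if mad == 0:
        # All amounts sit at (or very near) the median: nothing stands out.
        return [0.0 for _ in amounts]
    # 0.6745 rescales MAD so the score is comparable to a standard z-score.
    return [0.6745 * (a - med) / mad for a in amounts]


def flag_anomalies(amounts, threshold=3.5):
    """Return the indexes of amounts whose |modified z-score| exceeds threshold."""
    scores = robust_z_scores(amounts)
    return [i for i, score in enumerate(scores) if abs(score) > threshold]


# Hypothetical card transaction amounts for a single customer.
history = [42.10, 18.75, 55.00, 23.40, 61.20, 37.80, 4200.00, 29.95]
suspicious = flag_anomalies(history)
print(suspicious)  # indexes of transactions worth a closer look
```

A flagged transaction is only a candidate for review, not proof of fraud. In practice the threshold would be tuned against labeled historical cases to balance missed fraud against false alarms.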

Case Studies: Successful Implementations of AI in Finance

By leveraging AI’s capabilities, financial institutions have streamlined operations, enhanced decision-making, and improved customer experiences. Let’s explore real-world examples of successful AI implementations in finance.

Case Study 1: JPMorgan Chase’s Contract Intelligence (COIN)

JPMorgan Chase, one of the world’s largest financial institutions, pioneered the use of AI for contract analysis with its Contract Intelligence (COIN) system. This AI-powered platform can review and interpret legal documents in seconds, a task that traditionally took human lawyers hours or even days.

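By automating this process, COIN has significantly increased efficiency and reduced costs for JPMorgan Chase. According to widely cited reporting on the system, COIN reviews roughly 12,000 commercial credit agreements a year in seconds, work that previously consumed about 360,000 hours of staff time annually, allowing lawyers to focus on more complex tasks.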

Case Study 2: Bank of America’s Erica Virtual Assistant

Bank of America’s Erica is an AI-powered virtual assistant that provides customers with personalized banking services. Erica can help with various tasks, such as transferring money, paying bills, and checking account balances.

The introduction of Erica has led to a significant improvement in customer satisfaction at Bank of America. Customers value the convenience of communicating with their bank through short chat conversations around the clock.

Case Study 3: Goldman Sachs’s AI-Driven Trading Platform

Goldman Sachs, a leading investment bank, has developed an AI-driven trading platform that executes trades faster and more accurately than human traders. This platform analyzes data using machine learning methods to identify profitable trading opportunities.

Goldman Sachs’s AI trading platform has increased profitability and reduced risk for the bank. By automating the trading process, the bank has been able to capitalize on market trends more effectively and minimize losses.

Challenges and Considerations

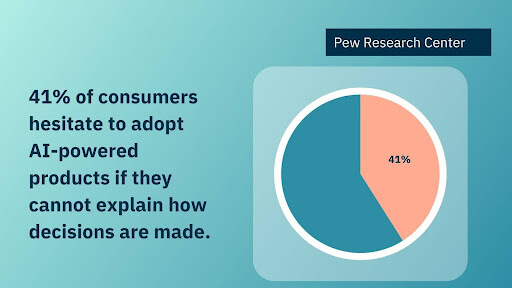

AI has revolutionized financial process automation, from fraud detection to personalized investment advice. However, rapid adoption has also raised challenges that must be taken seriously.

Data Quality and Privacy: The Necessity of AI

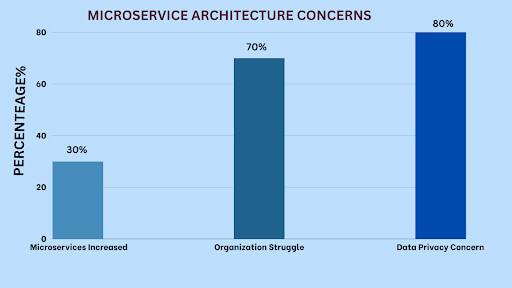

Data quality forms the backbone of AI applications, and it is especially critical in finance, where high accuracy and precision are required. Inconsistencies, missing values, and outliers can drastically impair the performance of AI models.
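As a rough illustration of what a basic data quality check might look like before records reach a model, the sketch below counts missing values, repeated IDs, and negative amounts in a batch of records. The field names (`id`, `amount`, `currency`), the sample records, and the rule that a negative amount is suspect are all assumptions made for this example; real pipelines would apply rules specific to their own data.

```python
def data_quality_report(records, required_fields):
    """Summarize missing values, duplicate IDs, and negative amounts."""
    missing = {field: 0 for field in required_fields}
    seen_ids = set()
    duplicate_ids = []
    negative_amount_ids = []

    for record in records:
        # Count required fields that are absent, None, or empty strings.
        for field in required_fields:
            if record.get(field) in (None, ""):
                missing[field] += 1

        # Track IDs that appear more than once.
        record_id = record.get("id")
        if record_id in seen_ids:
            duplicate_ids.append(record_id)
        seen_ids.add(record_id)

        # Treat a negative amount as an inconsistency (an assumption here).
        amount = record.get("amount")
        if isinstance(amount, (int, float)) and amount < 0:
            negative_amount_ids.append(record_id)

    return {
        "rows": len(records),
        "missing": missing,
        "duplicate_ids": duplicate_ids,
        "negative_amount_ids": negative_amount_ids,
    }


# Hypothetical transaction records with deliberate problems.
records = [
    {"id": 1, "amount": 120.0, "currency": "USD"},
    {"id": 2, "amount": None, "currency": "USD"},
    {"id": 2, "amount": 75.5, "currency": ""},
    {"id": 3, "amount": -10.0, "currency": "EUR"},
]
report = data_quality_report(records, ["amount", "currency"])
print(report)
```

A report like this is most useful as a gate: if the counts exceed agreed limits, the batch is held back for review instead of being used for training or scoring.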

Privacy is another significant concern. Financial institutions handle highly sensitive customer data, and a breach can have devastating consequences. Robust security measures and strict adherence to data privacy regulations such as GDPR are therefore essential.

According to McKinsey, 70% of financial institutions consider improving data quality essential to the success of their AI initiatives.

Ethical Issues: Navigating the Moral Compass

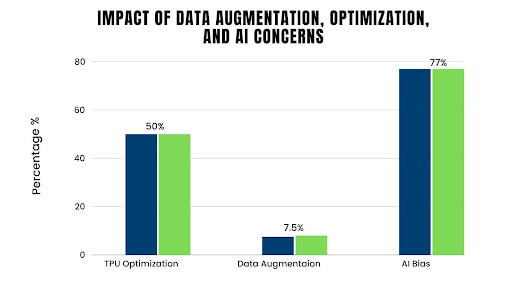

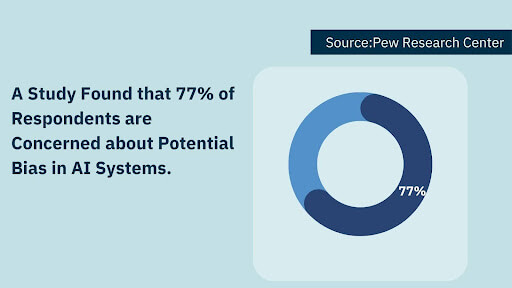

AI systems in finance can exhibit bias and cause discrimination. Algorithms that learn from skewed data are likely to reproduce that skew in their decisions. For instance, a credit scoring model that denies a disproportionate number of loan applications from specific demographic groups could widen existing financial disparities.
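One simple way to look for the kind of skew described above is to compare approval rates across groups. The sketch below computes a disparate impact ratio, the lowest group approval rate divided by the highest, and compares it with 0.8, a rule of thumb borrowed from the "four-fifths rule" used in US employment guidelines. Applying that cutoff to lending is an assumption made for illustration, not a legal standard, and the group labels and decisions are invented.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Map each group to its approval rate from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        if was_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Return the lowest approval rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        # Nobody was approved in any group, so there is no gap to measure.
        return 1.0
    return min(rates.values()) / highest


# Hypothetical loan decisions: group A approved 8 of 10, group B 5 of 10.
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)
rates = approval_rates(decisions)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # illustrative rule-of-thumb cutoff
print(rates, round(ratio, 3), needs_review)
```

A low ratio does not prove discrimination on its own, since groups may differ in legitimate risk factors, but it is a useful signal that a model’s decisions deserve closer examination.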

Job displacement is another ethical issue. As AI takes over traditionally manual work, some roles may disappear. Institutions need strategies to cushion that impact, including retraining workers for new roles.

According to a recent report by PwC, AI will create up to 12 million new jobs by 2030 while replacing 7.7 million.

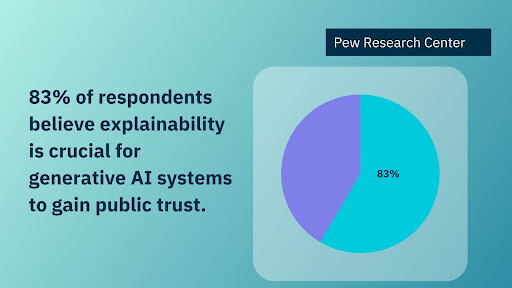

Integration and Implementation: Connecting the Dots

Integrating AI solutions into existing financial systems is challenging. Technical barriers include compatibility issues and legacy systems. The safety and reliability of AI-driven systems must also be assured.

Implementation takes effort, careful planning, and disciplined execution. To adopt AI successfully, financial institutions need to invest in talent, infrastructure, and governance.

According to an Accenture survey, 83% of financial services executives believe AI will fundamentally change the nature of their industry.

AI has immense scope for improving efficiency and effectiveness in financial institutions. However, data quality, privacy, ethics, and integration challenges must be addressed before institutions can fully reap its benefits. By navigating these intricacies with care, the financial industry can tap into AI’s power to advance an innovative, inclusive, and sustainable future for all.

Conclusion

In conclusion, AI is poised to play a pivotal role in the future of finance. By leveraging AI’s power, financial institutions can enhance efficiency, reduce risks, improve customer experiences, and stay ahead of the competition.

As the technology evolves, we can expect to see even more innovative applications across the financial sector, and institutions that embrace it stand to gain a competitive edge. That is an exciting prospect for the future of finance.

The future of finance will likely be characterized by a seamless integration of AI into every aspect of the business, from back-office operations to front-line customer interactions. And while AI in Finance will undoubtedly play a crucial role, it’s important to remember that it’s a tool to empower humans, not replace them.

FAQs

What are the benefits of using AI in finance?

AI benefits the finance industry in several ways, including:

- Improved efficiency: Automating data analysis and customer service tasks can significantly reduce operational costs.

- Enhanced decision-making: AI can examine very large data sets to find trends and patterns humans might miss, enabling more informed decision-making.

- Personalized customer experiences: AI-powered solutions can offer personalized financial recommendations and advice based on user needs and preferences.

- Increased security: AI can help detect and prevent fraud by identifying suspicious activity and anomalies in financial transactions.

What are the potential risks associated with AI in finance?

While AI offers many benefits, it also presents some risks, such as:

- Bias: If AI algorithms are trained on biased data, they may perpetuate inequalities and discrimination.

- Job displacement: As AI automates tasks, there is a risk of job losses in the financial industry.

- Privacy concerns: Handling sensitive financial data raises concerns about privacy and security.

How can financial institutions address the ethical concerns surrounding AI?

Financial institutions can address ethical concerns by:

- Ensuring data quality and fairness: Using unbiased data to train AI models and regularly evaluating them for bias.

- Developing ethical guidelines: Establishing clear guidelines for AI development and use, including principles of fairness, transparency, and accountability.

- Investing in education and training: Training employees on ethical AI practices and the potential risks.

What are some examples of AI applications in finance?

AI is being applied in several financial domains, such as:

- Fraud detection: Identifying suspicious activity in financial transactions.

- Risk management: Assessing risk and optimizing investment portfolios.

- Customer service: Offering tailored financial guidance and assistance.

- Trading: Executing trades at optimal times and prices.

- Credit scoring: Evaluating the creditworthiness of individuals and businesses.

How can [x]cube LABS Help?

[x]cube has been AI-native from the beginning, and we’ve been working with various versions of AI tech for over a decade. For example, we were working with BERT and GPT’s developer interface even before the public release of ChatGPT.

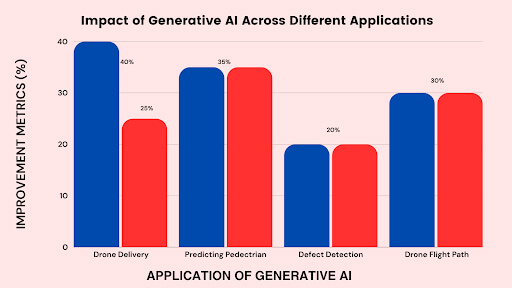

One of our initiatives has significantly improved the OCR scan rate for a complex extraction project. We’ve also been using Gen AI for projects ranging from object recognition to prediction improvement and chat-based interfaces.

Generative AI Services from [x]cube LABS:

- Neural Search: Revolutionize your search experience with AI-powered neural search models. These models use deep neural networks and transformers to understand and anticipate user queries, providing precise, context-aware results. Say goodbye to irrelevant results and hello to efficient, intuitive searching.

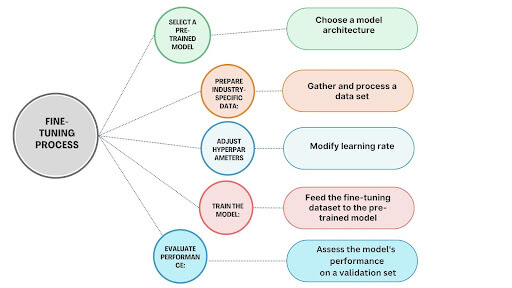

- Fine-Tuned Domain LLMs: Tailor language models to your specific industry for high-quality text generation, from product descriptions to marketing copy and technical documentation. Our models are also fine-tuned for NLP tasks like sentiment analysis, entity recognition, and language understanding.

- Creative Design: Generate unique logos, graphics, and visual designs with our generative AI services based on specific inputs and preferences.

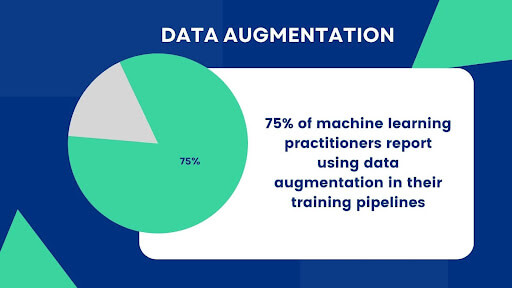

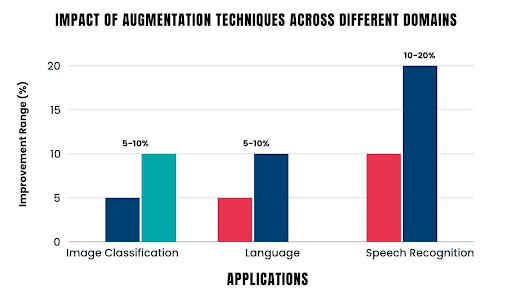

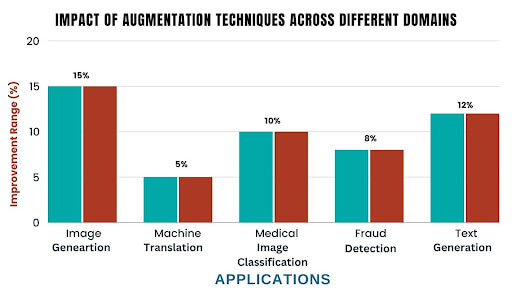

- Data Augmentation: Enhance your machine learning training data with synthetic samples that closely mirror real data, improving model performance and generalization.

- Natural Language Processing (NLP) Services: Handle sentiment analysis, language translation, text summarization, and question-answering systems with our AI-powered NLP services.

- Tutor Frameworks: Launch personalized courses with our plug-and-play Tutor Frameworks that track progress and tailor educational content to each learner’s journey, perfect for organizational learning and development initiatives.

Interested in transforming your business with generative AI? Talk to our experts in a FREE consultation today!

1-800-805-5783
