AI has many uses in scientific research. It helps us analyze vast amounts of information, spot trends, and guess what might happen next, opening up new ways to explore science. In the last few years, people have developed generative AI, a part of AI that can create new things, such as music, pictures, and writing.

Generative AI could accelerate scientific discovery and innovation by helping researchers generate novel hypotheses, design experiments, and analyze complex data.

Applications of Generative AI in Scientific Research

Drug Discovery and Development.

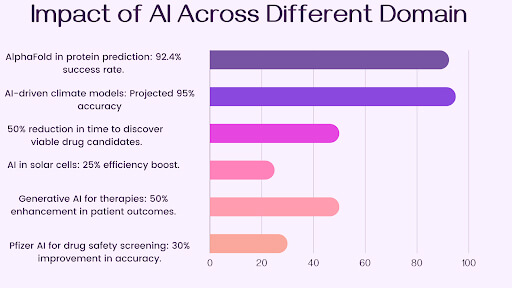

Cutting-edge AI in scientific research can help scientists create new drug molecules with unique shapes and features, speeding up the process of finding new medicines. For example, according to a 2023 Statista report, Pfizer’s use of AI for drug safety screening improved accuracy by 30%.

Spotting How Drugs Interact: AI can determine how different drugs might affect each other. Analyzing vast amounts of data can make drugs safer for patients.

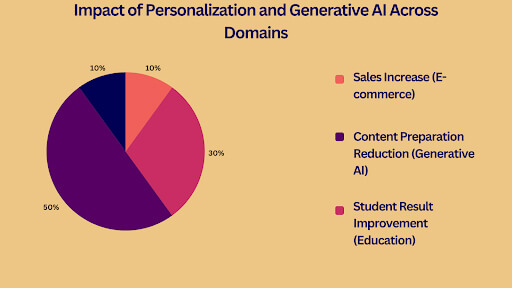

Designing Personalized Treatment Plans: AI can build personalized treatment plans from patient data, resulting in more efficient and targeted treatments. McKinsey projects that therapies tailored with generative AI could improve patient outcomes by up to 50%.

Materials Science.

Material Discovery: AI in scientific research enables deep searches of the vast chemical space to find novel materials with desired properties. Examples include superconducting materials and better batteries.

Optimizing Material Properties: AI enhances the efficiency of existing materials. For example, an MIT study showed that AI improved solar cell performance by 25%.

Accelerating Material Development: By predicting the outcomes of experiments and simulations, AI can shorten the path to new materials. According to a 2022 Nature article, generative AI reduced the time for discovering viable drug candidates by 50%.

Climate Science.

AI in scientific research can model complex climate systems, enabling researchers to predict future climate scenarios. According to the IPCC, AI-driven models could achieve 95% accuracy in climate scenario predictions by 2035.

Identification of Drug Interactions: Sifting through reams of data can reveal potential risks that are not immediately apparent, which can significantly improve patient and medication safety.

Developing Climate Mitigation Strategies: AI can help identify and assess potential climate mitigation initiatives, such as carbon capture and storage or renewable energy technologies.

Bioinformatics.

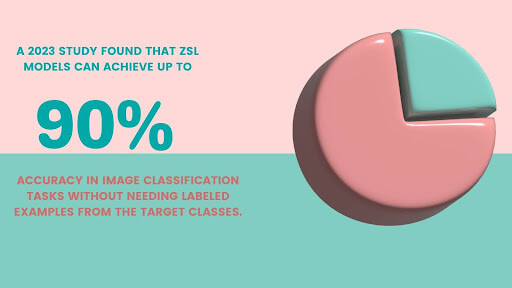

AI-based protein structure prediction is a key tool for understanding protein function and for drug development, since a protein's structure helps explain how it behaves. AI models like AlphaFold have transformed the field: in the CASP14 assessment, AlphaFold 2 achieved a median accuracy score (GDT) of 92.4 out of 100 for its predicted protein structures.
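Accuracy figures for structure prediction are usually reported as GDT_TS scores rather than simple success rates. GDT_TS averages the percentage of residues that land within 1, 2, 4, and 8 angstroms of their experimentally determined positions: GDT_TS = (P1 + P2 + P4 + P8) / 4. The sketch below is a simplified illustration that assumes the per-residue distances have already been measured after superimposing the two structures; the function name and sample distances are hypothetical.

```python
def gdt_ts(distances, cutoffs=(1.0, 2.0, 4.0, 8.0)):
    """Simplified GDT_TS: mean percentage of residues within each cutoff (angstroms).

    Assumes `distances` holds one predicted-vs-experimental distance per residue,
    measured after the structures were superimposed. The real metric also searches
    for the best superposition at each cutoff, which is omitted here.
    """
    if not distances:
        raise ValueError("need at least one residue distance")
    percentages = [
        100.0 * sum(d <= cutoff for d in distances) / len(distances)
        for cutoff in cutoffs
    ]
    return sum(percentages) / len(percentages)


# Hypothetical per-residue distances for a five-residue toy example
example_distances = [0.5, 1.5, 3.0, 6.0, 10.0]
score = gdt_ts(example_distances)
```

A score near 100 means almost every residue sits close to its experimentally determined position, which is why a median in the low 90s is considered competitive with experimental methods.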

Genome Analysis: Machine learning can analyze genomic data, determine genetic variations between species, and, based on that, make customized therapies possible.

Drug Target Identification: AI can analyze biological data, such as protein-protein interactions, to identify and evaluate potential drug targets.

Generative AI enables scientists to speed up research, uncover fresh findings, and address significant global challenges.

Scientific Research: What are the drawbacks and obstacles of using Generative AI?

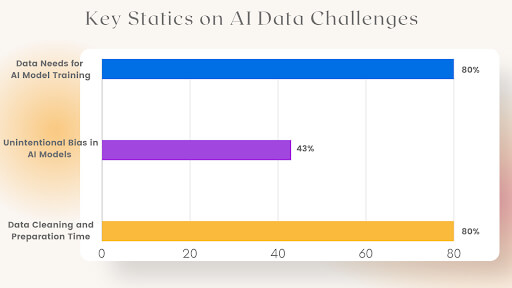

1. Data Quality and Quantity

Data Scarcity:

Many scientific fields lack enough high-quality data to develop effective generative AI models.

Data Bias:

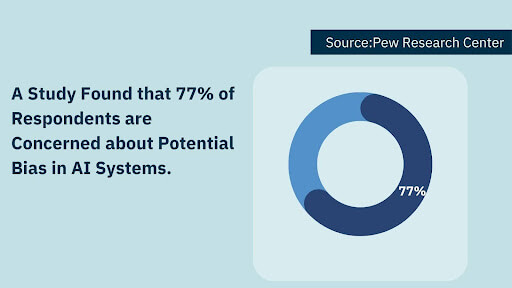

When training data is biased, models generalize poorly and can therefore produce inaccurate results.

Data Noise:

Misleading or unreliable information hampers the modeling process and can result in forecasting errors.
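Simple checks can surface some of these data problems before any model is trained. The sketch below is a minimal illustration, not a complete data-quality pipeline: it reports how evenly the labels are distributed (a rough bias check) and flags readings that sit far from the rest using the median absolute deviation (a rough noise check). The function names, the 3.5 threshold, and the toy data are assumptions made for this example.

```python
from collections import Counter
from statistics import median


def class_shares(labels):
    """Return each label's share of the dataset (a rough bias check)."""
    counts = Counter(labels)
    total = len(labels)
    return {label: count / total for label, count in counts.items()}


def flag_outliers(values, threshold=3.5):
    """Flag likely noisy readings using the median absolute deviation (MAD)."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return []  # no spread to measure against
    # 0.6745 rescales the MAD so the score is comparable to a z-score
    return [v for v in values if 0.6745 * abs(v - med) / mad > threshold]


# Hypothetical toy data, for illustration only
labels = ["active"] * 9 + ["inactive"] * 1
readings = [10.1, 9.9, 10.0, 10.2, 9.8, 10.0, 42.0]

shares = class_shares(labels)      # 90% of samples carry one label
outliers = flag_outliers(readings)  # the 42.0 reading stands apart
```

A heavily skewed label distribution or a handful of flagged readings does not prove the data is unusable, but it is a cue to inspect the dataset before trusting a model trained on it.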

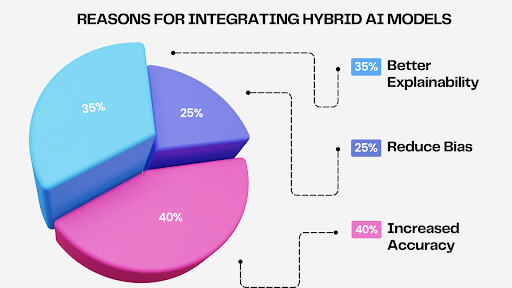

2. Model Bias and Fairness.

Algorithmic Bias:

Biased or discriminatory training data can lead generative AI models to produce unjust results.

Fairness and Equity

Fairness is one of the most critical concerns for AI models. In fields such as healthcare and criminal justice, models must be inclusive and responsive to all stakeholders.

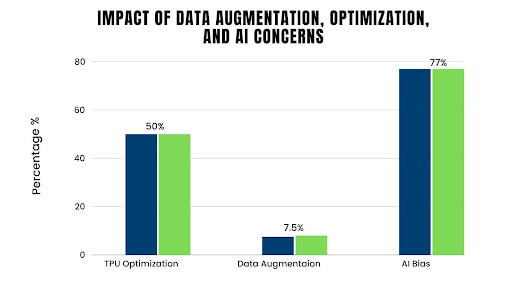

3. Computational Resources.

High Computational Cost

Generative AI models require significant hardware and software resources for large-scale deployment, especially computational power.

Scalability:

Scaling up to large datasets and complex tasks is challenging for generative AI models.

4. Interpretability and Explainability.

Black-Box Nature:

Many generative AI models, especially deep neural networks (DNNs), are considered black boxes, and their decision-making processes can be challenging to interpret.

Trust and Transparency:

When AI models cannot be interpreted, they are hard to trust and may be unsuitable for critical applications such as healthcare and finance.
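One widely used way to look inside a black-box model is permutation importance: shuffle one input feature at a time and measure how much the model's error grows. The sketch below is a minimal illustration under simple assumptions, not a production method; `black_box`, the toy rows, and all function names are hypothetical stand-ins for a real trained model and dataset.

```python
import random


def mean_squared_error(model, rows, targets):
    """Average squared difference between model predictions and targets."""
    return sum((model(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(model, rows, targets, n_repeats=5, seed=0):
    """Return, per feature, the average increase in error after shuffling it."""
    rng = random.Random(seed)
    baseline = mean_squared_error(model, rows, targets)
    importances = []
    for col in range(len(rows[0])):
        increases = []
        for _ in range(n_repeats):
            shuffled = [r[col] for r in rows]
            rng.shuffle(shuffled)
            # Rebuild each row with only this one column replaced
            permuted = [r[:col] + [v] + r[col + 1:] for r, v in zip(rows, shuffled)]
            increases.append(mean_squared_error(model, permuted, targets) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


def black_box(row):
    # Stand-in for an opaque model: it secretly uses only the first feature
    return 2.0 * row[0]


# Hypothetical toy dataset with two features per row
rows = [[float(i), float(i % 3)] for i in range(8)]
targets = [2.0 * r[0] for r in rows]

importances = permutation_importance(black_box, rows, targets)
```

Here the first feature receives a positive importance and the second receives zero, which matches how the stand-in model actually behaves. On a real model, the same procedure gives a first indication of which inputs drive its predictions without opening the model itself.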

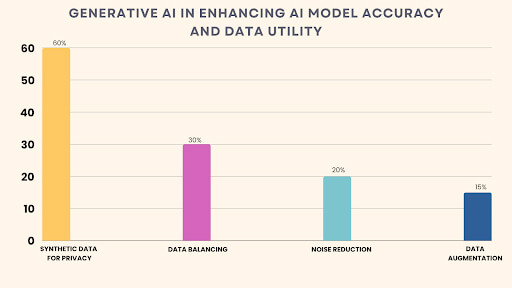

We must address these challenges to make generative AI workable in scientific research. Researchers must develop robust methods for data curation, model training, and testing to ensure that AI is used ethically and produces reliable results.

The Future of Generative AI in Scientific Research

Generative AI is poised to transform scientific research. As the technology continues to evolve, we can expect to see even more groundbreaking applications:

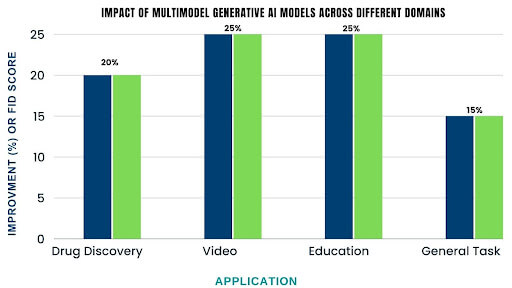

- Multimodal Generative Models: These models can produce several types of data, including text, images, and audio, which could give scientists a more comprehensive view of their subject.

- AI-Powered Scientific Discovery: Generative AI can automatically generate hypotheses, design experiments, and analyze data, speeding up the research cycle.

- Personalized Medicine: AI will be able to create an individualized therapy plan for each patient, helping ensure that treatments are effective and well targeted.

- Material Science: AI could help introduce new materials that are more robust and more conductive.

- Climate Science: AI can model complex climate systems, enabling the prediction of future climate scenarios.

Ethical Considerations

As generative AI grows increasingly powerful, ethical issues must be addressed:

- Bias and Fairness: Training on diverse, representative data helps ensure that AI models do not produce discriminatory outcomes.

- Intellectual Property Rights: Ownership of content and data generated by AI in scientific research needs to be clearly defined.

- Misinformation and Disinformation: Generative AI must not be used to spread false or misleading information in scientific research.

The Role of Human-AI Collaboration

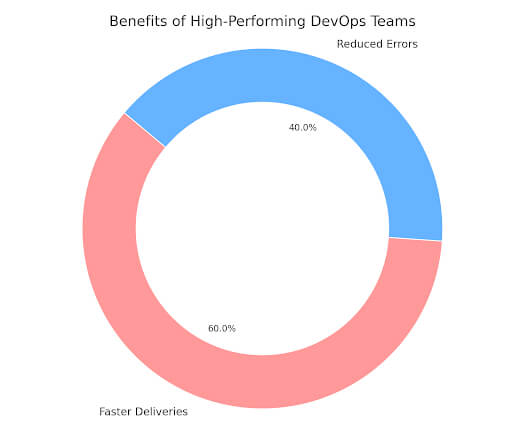

AI makes research tasks more efficient, yet human involvement remains essential for advancing science. People bring context, creativity, and critical thinking to the table, while AI excels at handling repetitive tasks and analyzing extensive datasets.

- Augmented Intelligence: AI can augment human capabilities by offering insights and suggestions.

- Shared Decision-Making: Humans and AI can reach informed decisions together.

- Ethical Oversight: Humans need to oversee the design and deployment of AI to ensure ethical and responsible use.

By combining generative AI with human collaboration, scientists can make faster progress and open up new areas of inquiry.

Conclusion

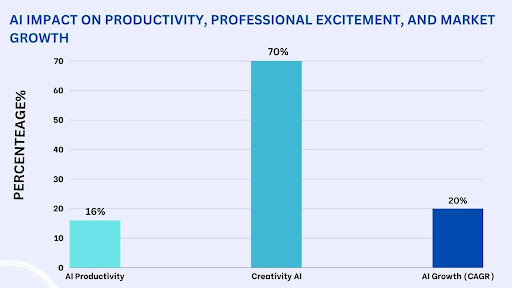

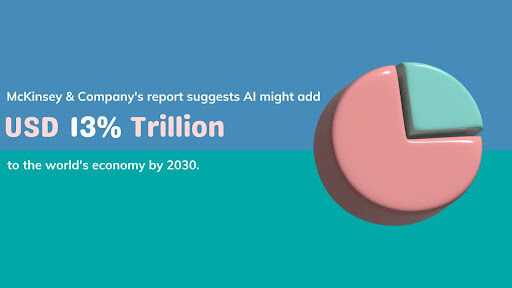

Generative AI influences how we approach discovery and innovation. It accelerates research, enables creativity at a new level, and makes possible breakthroughs that had previously appeared unreachable.

AI in scientific research helps researchers explore complex data sets, uncover new insights, and develop creative solutions to address some of the world’s biggest problems.

Addressing the challenges related to generative AI, such as data quality, bias, and interpretability, is vital as we move forward. By creating robust strategies and ethical guidelines, we can ensure that AI in scientific research is used responsibly and effectively.

Scientists, researchers, and policymakers must collaborate to foster innovation, share knowledge, and address ethical concerns to realize generative AI’s potential. By embracing AI in scientific research as a powerful tool, we can open new frontiers of scientific discovery and build a better future for humankind.

FAQs

1. How does generative AI accelerate scientific discovery?

Generative AI accelerates research by generating hypotheses, designing experiments, and analyzing complex datasets. It helps identify patterns, create simulations, and make predictions faster than traditional methods, speeding up innovation.

2. What are some key applications of generative AI in scientific research?

Generative AI is used in:

- Drug Discovery: Designing new molecules and predicting drug interactions.

- Materials Science: Discovering and optimizing materials for specific purposes.

- Climate Science: Modeling and predicting climate scenarios.

- Bioinformatics: Analyzing genomic data and identifying drug targets.

3. What challenges does generative AI face in scientific research?

Key challenges include:

- Data scarcity, bias, and noise impacting model accuracy.

- High computational costs for model training and deployment.

- Limited interpretability of AI decisions (black-box nature).

- Ethical concerns, such as intellectual property and misinformation.

4. How can generative AI and human collaboration benefit research?

Generative AI handles large datasets and repetitive tasks, while humans bring creativity, context, and ethical oversight. Together, they enhance decision-making, accelerate discovery, and ensure responsible use of AI technologies.

How can [x]cube LABS Help?

[x]cube has been AI native from the beginning, and we’ve been working with various versions of AI tech for over a decade. For example, we were working with BERT and GPT’s developer interface even before the public release of ChatGPT.

One of our initiatives has significantly improved the OCR scan rate for a complex extraction project. We’ve also been using Gen AI for projects ranging from object recognition to prediction improvement and chat-based interfaces.

Generative AI Services from [x]cube LABS:

- Neural Search: Revolutionize your search experience with AI-powered neural search models. These models use deep neural networks and transformers to understand and anticipate user queries, providing precise, context-aware results. Say goodbye to irrelevant results and hello to efficient, intuitive searching.

- Fine-Tuned Domain LLMs: Tailor language models to your specific industry for high-quality text generation, from product descriptions to marketing copy and technical documentation. Our models are also fine-tuned for NLP tasks like sentiment analysis, entity recognition, and language understanding.

- Creative Design: Generate unique logos, graphics, and visual designs with our generative AI services based on specific inputs and preferences.

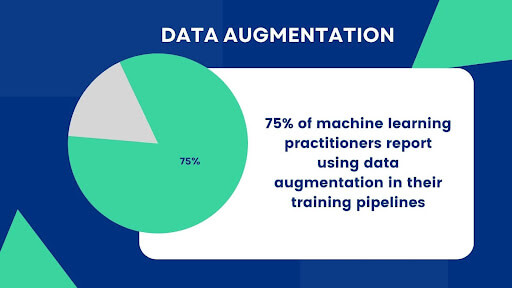

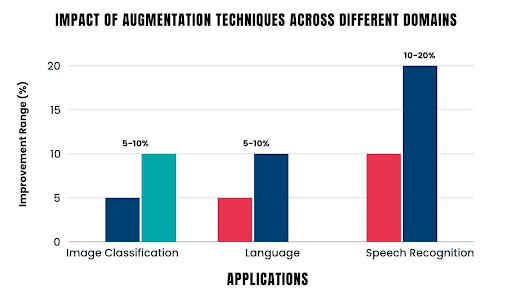

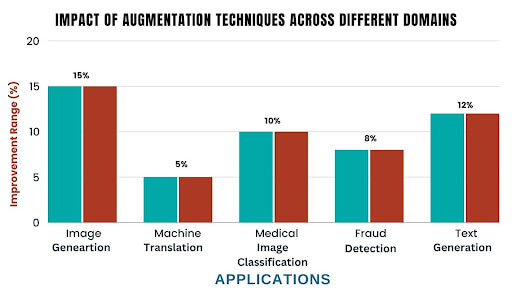

- Data Augmentation: Enhance your machine learning training data with synthetic samples that closely mirror real data, improving model performance and generalization.

- Natural Language Processing (NLP) Services: Handle sentiment analysis, language translation, text summarization, and question-answering systems with our AI-powered NLP services.

- Tutor Frameworks: Launch personalized courses with our plug-and-play Tutor Frameworks, which track progress and tailor educational content to each learner’s journey. These frameworks are perfect for organizational learning and development initiatives.

Interested in transforming your business with generative AI? Talk to our experts over a FREE consultation today!

1-800-805-5783
