1-800-805-5783

Multimodal generative AI models are revolutionizing artificial intelligence. They can process and create data in different forms, including text, images, and sound. These models open up new opportunities in many areas. By combining these data types, they can be used to create content and solve complex problems.

A study by Microsoft Research demonstrated that using GANs to generate synthetic images can improve the accuracy of image classification models by 5-10%. This is because GANs can generate highly realistic images that augment the training dataset, helping models learn more robust and generalizable features.

This blog post examines the main components and challenges involved in building multimodal AI models that can work with multiple input types. We’ll discuss the methods used to represent and fuse different kinds of data, what this tech can do, and where it falls short.

Combining multiple modalities extends the capabilities of generative AI models. By drawing on information from different sources, these multimodal AI models can do the following:

Multimodal generative AI describes a group of AI systems that can produce content in different forms, like words, pictures, and sounds. These systems use methods from natural language processing, computer vision, and sound analysis to create outputs that seem accurate and complete.

Models that generate content from multiple types of input (like text, pictures, and sound) are changing AI. These multimodal systems produce more detailed and valuable results. To pull this off, they depend on a few essential parts:

Text Representation Models

BERT (Bidirectional Encoder Representations from Transformers): This robust language model captures context and semantic relationships in text.

GPT (Generative Pre-trained Transformer): This family of language models can generate text that sounds human.

BERT and GPT lead the pack in many language tasks. Between them, they excel at classifying text, answering questions, and generating new text.

Image Representation Models

CNNs (Convolutional Neural Networks): These networks work well with pictures.

Vision Transformers: This newer design borrows ideas from language processing. It shows promise in recognizing and generating images.

CNNs are widely used to recognize and classify images. Vision Transformers have become more popular in recent years because they perform better on some benchmarks.

Audio Representation Models

DeepSpeech, a speech recognition model that relies on deep neural networks, and WaveNet have shown remarkable results in speech recognition and audio synthesis tasks, respectively.

Studies have shown that the fusion technique you choose can significantly impact how well multimodal generative AI models perform. You often need to try out different methods to find the best one.
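To make the idea of a "fusion technique" concrete, here is a minimal sketch that contrasts two common options: early fusion (concatenating features before a single model) and late fusion (combining per-modality predictions). The post does not name specific techniques, so these two are chosen purely for illustration, and the feature vectors, weights, and the `linear_score` stand-in model are all hypothetical.

```python
import math

def early_fusion(text_vec, image_vec):
    """Early fusion: concatenate per-modality features into one vector."""
    return text_vec + image_vec

def late_fusion(text_score, image_score, text_weight=0.5):
    """Late fusion: weighted average of per-modality predictions."""
    return text_weight * text_score + (1.0 - text_weight) * image_score

def linear_score(features, weights):
    """Stand-in 'model' (hypothetical): sigmoid of a dot product."""
    z = sum(f * w for f, w in zip(features, weights))
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical feature vectors for a single example.
text_features = [0.2, 0.7, 0.1]
image_features = [0.9, 0.4]

# Early fusion: one model sees the concatenated features.
fused = early_fusion(text_features, image_features)
early_score = linear_score(fused, [0.5, -0.2, 0.1, 0.8, 0.3])

# Late fusion: one model per modality, predictions merged afterwards.
text_score = linear_score(text_features, [0.5, -0.2, 0.1])
image_score = linear_score(image_features, [0.8, 0.3])
late_score = late_fusion(text_score, image_score, text_weight=0.4)

print(f"early fusion score: {early_score:.3f}")
print(f"late fusion score:  {late_score:.3f}")
```

Early fusion lets a model learn interactions between modalities, while late fusion keeps each modality's model independent and is easier to debug. Which one works better tends to depend on the task, which is why trying several is usually necessary.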

A study by Stanford University found that 85% of existing multimodal datasets suffer from data imbalance, impacting model performance.
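One common way to soften the effect of imbalance is to weight under-represented groups more heavily during training. The sketch below uses the standard "balanced" inverse-frequency formula (total samples divided by the number of groups times the group count). It is only an illustration: the group names and counts are made up, and the post does not say which kind of imbalance the study measured.

```python
from collections import Counter

def balanced_weights(labels):
    """Inverse-frequency weights: n_samples / (n_groups * group_count)."""
    counts = Counter(labels)
    n_samples, n_groups = len(labels), len(counts)
    return {group: n_samples / (n_groups * count)
            for group, count in counts.items()}

# Hypothetical dataset: which modality pairing each sample contains.
labels = ["text+image"] * 80 + ["text+audio"] * 15 + ["image+audio"] * 5
weights = balanced_weights(labels)

for group, weight in sorted(weights.items()):
    print(f"{group}: {weight:.2f}")
```

Rare groups receive larger weights, so each group contributes roughly equally to a weighted loss.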

Research has shown that 30-40% of errors in multimodal systems can be attributed to misalignment or inconsistency between modalities.

Training a state-of-the-art multimodal model can require 100+ GPUs and 30+ days of training time. This highlights the significant computational resources necessary to develop these complex models.
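As a rough back-of-the-envelope check on those figures, the short sketch below converts GPU count and training days into GPU-hours. The hourly rate is a placeholder chosen only to show the arithmetic; it is not a quoted price from any provider.

```python
def gpu_hours(num_gpus, days):
    """Total GPU-hours, assuming every GPU runs around the clock."""
    return num_gpus * days * 24

def estimated_cost(hours, price_per_gpu_hour):
    """Cost estimate for a given (assumed) hourly rate."""
    return hours * price_per_gpu_hour

# Lower bound taken from the figures above: 100 GPUs for 30 days.
hours = gpu_hours(num_gpus=100, days=30)

# HYPOTHETICAL_RATE is a placeholder, not a real price.
HYPOTHETICAL_RATE = 2.0
cost = estimated_cost(hours, HYPOTHETICAL_RATE)

print(f"{hours:,} GPU-hours")  # 72,000 GPU-hours
```

Even at the lower bound, a single training run lands in the tens of thousands of GPU-hours, before counting failed runs or hyperparameter searches.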

A study by the Pew Research Center found that 55% of respondents expressed concerns about privacy and bias in multimodal AI model systems.

Research from Stanford University showed that data augmentation methods can boost the effectiveness of multimodal models by 15-20%, improving their robustness and ability to generalize.
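Augmenting multimodal data has one extra wrinkle: a change to one modality may need a matching change in the other, or the pair stops agreeing. The sketch below illustrates this with a toy image (a nested list of pixel values) and a caption. The function names and the left/right word swap are illustrative assumptions, not a method described in the research above.

```python
def flip_image_horizontally(image):
    """Mirror each row of a 2D image given as a list of rows."""
    return [row[::-1] for row in image]

def swap_left_right(caption):
    """Keep the caption consistent with a mirrored image."""
    swaps = {"left": "right", "right": "left"}
    return " ".join(swaps.get(word, word) for word in caption.split())

def augment_pair(image, caption):
    """Apply the same augmentation to both modalities together."""
    return flip_image_horizontally(image), swap_left_right(caption)

# Hypothetical image/caption pair.
image = [[1, 2, 3],
         [4, 5, 6]]
caption = "a dog on the left of a tree"

aug_image, aug_caption = augment_pair(image, caption)
print(aug_image)    # [[3, 2, 1], [6, 5, 4]]
print(aug_caption)  # a dog on the right of a tree
```

Flipping the image without updating the caption would introduce exactly the kind of cross-modal inconsistency that hurts multimodal models.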

Research shows CNNs perform well in classifying images. At the same time, models based on transformers have proven effective in processing natural language.

A study by Google AI demonstrated that joint embedding techniques can improve the performance of multimodal models, especially for tasks that require understanding the relationships between different modalities.

For example, joint embedding can be used to learn common representations for text and images, enabling the model to effectively combine information from both modalities to perform tasks like image captioning or visual question answering.
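A minimal sketch of the idea follows: each modality is projected into a shared space, and a contrastive loss rewards matching text-image pairs for landing close together. The projection matrices and feature values are hypothetical, fixed by hand rather than learned, and the InfoNCE-style loss is one common choice, not necessarily the one used in the study above.

```python
import math

def project(vec, matrix):
    """Linear projection; the matrix has one row per output dimension."""
    return [sum(w * x for w, x in zip(row, vec)) for row in matrix]

def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def contrastive_loss(text_embs, image_embs, temperature=0.1):
    """InfoNCE-style loss (text-to-image); pair i matches image i."""
    total = 0.0
    for i, text in enumerate(text_embs):
        logits = [cosine_similarity(text, img) / temperature
                  for img in image_embs]
        log_denominator = math.log(sum(math.exp(l) for l in logits))
        total += log_denominator - logits[i]
    return total / len(text_embs)

# Hypothetical raw features: 3-d text, 4-d image.
text_features = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
image_features = [[0.9, 0.1, 0.3, 0.3], [0.1, 0.9, 0.3, 0.3]]

# Hypothetical projections into a shared 2-d space.
W_text = [[1.0, 0.0, 0.5], [0.0, 1.0, 0.5]]
W_image = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]

text_embs = [project(t, W_text) for t in text_features]
image_embs = [project(v, W_image) for v in image_features]

aligned_loss = contrastive_loss(text_embs, image_embs)
shuffled_loss = contrastive_loss(text_embs, image_embs[::-1])
print(f"aligned: {aligned_loss:.4f}, shuffled: {shuffled_loss:.4f}")
```

The loss is low when each text embedding sits nearest its own image and high when the pairs are shuffled. In a real system the projection weights would be trained to minimize this loss.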

By carefully selecting and combining these techniques, researchers can build powerful multimodal AI models that can effectively process and generate data from multiple modalities.

Healthcare:

Entertainment:

Education:

Benefits:

Limitations:

A study by MIT found that multimodal models can improve task accuracy by 10-20% compared to unimodal models.

By tackling these hurdles and making the most of multimodal generative AI’s advantages, experts and programmers can build solid and groundbreaking tools for many different fields.

Recent research has shown that graph-based multimodal models can improve performance on tasks such as visual question answering by 5-10%. Graph-based models can effectively capture the relationships between different modalities and reason over complex structures, leading to more accurate and informative results.

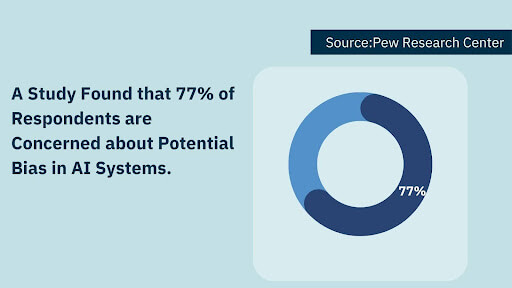

A study by the Pew Research Center found that 77% of respondents are concerned about potential bias in AI systems.

According to a report by Grand View Research, the global market for multimodal AI models is expected to reach $6.2 billion by 2028. This significant growth stems from the rising need for AI-powered solutions that can process and understand data from many sources.

By tackling these issues and adopting new trends, scientists and coders can tap into the full power of multimodal generative AI and build game-changing apps in many fields.

Multimodal AI models are reshaping artificial intelligence. They have the potential to create systems that are smarter, more flexible, and more human-like. Combining information from different sources allows these models to understand complex relationships and produce more thorough and meaningful results.

As scientists continue to work on multimodal AI, we’ll see more groundbreaking uses across many fields. The possibilities range from personalized medical treatments to augmented reality experiences.

Yet, we must tackle the problems with multimodal AI models, such as the need for more data, computational complexity, and ethical issues. By focusing on these areas, we can ensure that multimodal generative AI develops in a way that helps society.

To wrap up, multimodal generative AI shows great promise. It can change how we use technology and tackle real-world issues. If we embrace this tech and face its hurdles head-on, we can build a future where AI boosts what humans can do and improves our lives.

1. What is a multimodal generative AI model?

A multimodal generative AI model integrates different data types (text, images, audio) to generate outputs, enabling more complex and versatile AI-generated content.

2. How do multimodal AI models work?

These models process and combine information from multiple data formats, using machine learning techniques to understand context and relationships between text, images, and audio.

3. What are the key benefits of multimodal generative AI?

Multimodal AI can produce richer, more contextual content, improve user interactions, and enhance applications like content creation, virtual assistants, and interactive media.

4. What are the challenges in developing multimodal generative AI models?

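Key challenges include the need for large, balanced datasets, keeping modalities aligned and consistent, high computational cost, and ethical concerns such as privacy and bias.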

5. Which industries benefit from multimodal AI models?

Industries like healthcare, entertainment, marketing, and education use multimodal AI for applications such as virtual assistants, content creation, personalized ads, and immersive learning experiences.

6. What technologies are used in multimodal generative AI?

Technologies like deep learning, transformers (GPT), convolutional neural networks (CNNs), and attention mechanisms are commonly used to develop multimodal AI models.

[x]cube has been AI-native from the beginning, and we’ve been working with various versions of AI tech for over a decade. For example, we’ve been working with BERT and GPT’s developer interface since before the public release of ChatGPT.

One of our initiatives has significantly improved the OCR scan rate for a complex extraction project. We’ve also been using Gen AI for projects ranging from object recognition to prediction improvement and chat-based interfaces.

Interested in transforming your business with generative AI? Talk to our experts in a FREE consultation today!