1-800-805-5783

Real-time generative AI, which creates content on the spot, has many uses. It powers customer service chatbots and helps produce creative content, which shows how flexible it can be. To make the most of real-time generative AI applications, we need to know what they can and can’t do. This balanced view helps us find new and exciting ways to use the technology.

In this blog post, we’ll look at the main ideas behind real-time generative AI, what’s good about it, what problems it faces, and how different industries use it.

Latency and Response Time

Real-time apps need quick responses. When a generative AI application has to run complex computations to create content, responses slow down, which makes real-time use tricky.

Ways to speed things up: Making models smaller, cutting out unnecessary parts, and using special hardware can help speed up responses.

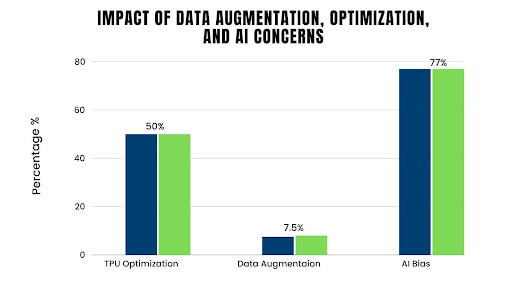

A study found that optimizing a large-scale generative AI model for TPUs could reduce inference time by 40-60%.
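Before trying any of these speed-ups, it helps to measure how long responses actually take. The snippet below is a minimal sketch of that idea, not a production benchmark: `fake_generate` is a hypothetical stand-in for a real model call, and the number of runs is an arbitrary assumption.

```python
import statistics
import time


def fake_generate(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; it just sleeps briefly."""
    time.sleep(0.001)
    return prompt.upper()


def measure_latency(fn, prompt: str, runs: int = 20) -> dict:
    """Call fn repeatedly and report median and 95th-percentile latency in ms."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn(prompt)
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    # Pick the sample at the 95% position, clamped to the last index.
    p95_index = min(len(samples) - 1, int(0.95 * len(samples)))
    return {"p50_ms": statistics.median(samples), "p95_ms": samples[p95_index]}


stats = measure_latency(fake_generate, "hello")
print(stats)
```

Tracking a high percentile alongside the median is useful because real-time users tend to notice the slowest responses, not the average one.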

Computational Resources

Resource-hungry models: Generative AI applications that produce new, substantial content need a lot of computing power for both training and inference.

Hardware limits: Limited access to processors (CPUs, GPUs, TPUs) can cap the size and complexity of real-time AI apps.

Using the cloud: Tapping into cloud platforms gives access to more computing power when needed. A study by OpenAI estimated that training a large-scale generative AI model can require thousands of GPUs.

Data Limitations

Data quality and quantity: The quality and amount of training data significantly impact the performance of generative AI models.

Data privacy: Gathering and using big datasets can make people worry about privacy.

Data augmentation: Methods like augmentation can help overcome data limits and improve models’ performance in different situations.

A study by Stanford University found that using data augmentation techniques can improve the accuracy of image classification models by 5-10%.
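To make data augmentation concrete, here is a small sketch using plain Python lists as grayscale images. The two transforms (a horizontal flip and light pixel noise) and the noise range of 5 are illustrative assumptions; real projects typically use an image library and choose transforms that fit their data.

```python
import random


def horizontal_flip(image):
    """Mirror each row of a 2D grayscale image (a list of lists)."""
    return [list(reversed(row)) for row in image]


def add_noise(image, scale, rng):
    """Add small uniform integer noise to each pixel, clamped to 0-255."""
    return [
        [min(255, max(0, pixel + rng.randint(-scale, scale))) for pixel in row]
        for row in image
    ]


def augment(image, seed=0):
    """Return the original image plus two augmented variants."""
    rng = random.Random(seed)  # seeded so the output is repeatable
    return [image, horizontal_flip(image), add_noise(image, 5, rng)]


image = [[0, 50, 100], [150, 200, 250]]
variants = augment(image)
print(len(variants), "training examples from 1 original")
```

Each variant keeps the same label as the original, so one labeled image becomes several training examples.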

Ethical Considerations

Bias and fairness: Generative AI models can perpetuate biases from their training data, which can lead to unfair or biased outputs.

Misinformation and deepfakes: Because generative AI applications can produce very realistic fake content, people worry about false information and deepfakes.

Transparency and explainability: Understanding how generative AI models make choices is critical to keeping these systems accountable and correcting possible biases.

A Pew Research Center survey found that 77% of respondents are concerned about potential bias in AI systems.

Model Optimization

Pruning: Removing unneeded connections and weights from the model to make it smaller and cheaper to compute.
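As a rough illustration of pruning, the sketch below zeroes out the smallest weights in a tiny matrix. It assumes magnitude-based pruning, which is only one of several pruning strategies, and the weights and 50% sparsity target are made-up values.

```python
def magnitude_prune(weights, sparsity):
    """Zero out roughly the given fraction of weights with the smallest magnitude.

    Weights that tie with the threshold are also pruned, so slightly more
    than the requested fraction can be removed.
    """
    flat = sorted(abs(w) for row in weights for w in row)
    n_prune = int(len(flat) * sparsity)
    if n_prune == 0:
        return [row[:] for row in weights]
    threshold = flat[n_prune - 1]
    return [[0.0 if abs(w) <= threshold else w for w in row] for row in weights]


weights = [[0.8, -0.05, 0.3], [0.01, -0.6, 0.02]]
pruned = magnitude_prune(weights, sparsity=0.5)
print(pruned)
```

In practice a pruned model is usually fine-tuned afterwards to recover accuracy, and the zeros only save time when the runtime or hardware can skip them.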

Quantization: Lowering the precision of the numbers in the model (for example, from 32-bit floats to 8-bit integers) to save memory and computation time.
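The sketch below shows one simple form of quantization: symmetric 8-bit quantization with a single shared scale factor. The weight values are made up, and real toolkits use more refined schemes (per-channel scales, calibration data), so treat this as an illustration of the idea only.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [round(v / scale) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q, restored)
```

Each stored value now fits in one byte instead of four, at the cost of a rounding error of at most half the scale factor per weight.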

Distillation: Shifting knowledge from a big, intricate model to a more compact, efficient one.
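One common way to set up distillation is to train the small model to match the large model's softened output probabilities. The sketch below computes that matching loss for a single example; the logits and the temperature of 2.0 are illustrative assumptions, and scaling by the squared temperature is a widely used convention, not a requirement.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert logits to probabilities; higher temperature gives a softer result."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the softened teacher distribution to the student's."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


teacher = [4.0, 1.0, 0.5]
student = [2.5, 1.5, 0.2]
loss = distillation_loss(teacher, student)
print(loss)
```

The loss is zero when the student reproduces the teacher's distribution exactly and grows as the two diverge, so minimizing it pulls the compact model toward the larger one's behavior.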

Hardware Acceleration

GPUs: Graphics Processing Units are processors that work in parallel, speeding up matrix operations and other computations often seen in deep learning.

TPUs: Tensor Processing Units are custom-built hardware for machine learning tasks, offering big performance boosts for specific jobs.

Cloud-Based Infrastructure

Scalability: Cloud-based platforms can scale resources fast to meet real-time application needs.

Cost-efficiency: Pay-as-you-go pricing helps optimize costs for changing workloads.

Managed services: Cloud providers offer services to manage machine learning and AI, making it easier to deploy and manage.

Efficient Data Pipelines

Batch processing: This method processes data in batches for better throughput.
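A minimal sketch of batching is shown below. `fake_generate_batch` is a hypothetical stand-in for a model call that handles several prompts at once, and the batch size of 2 is arbitrary.

```python
def make_batches(items, batch_size):
    """Yield consecutive slices of items, each with at most batch_size elements."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def fake_generate_batch(prompts):
    """Hypothetical stand-in for one model call that handles a whole batch."""
    return [p.upper() for p in prompts]


prompts = ["a", "b", "c", "d", "e"]
results = []
for batch in make_batches(prompts, 2):
    results.extend(fake_generate_batch(batch))
print(results)
```

Larger batches usually raise throughput but make each request wait longer, so real-time systems tend to keep batches small or cap how long a request can wait for a batch to fill.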

Streaming processing: This approach handles data as it arrives, in real time.
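Python generators are a simple way to sketch streaming: each item is processed as soon as it arrives instead of waiting for the full dataset. Both `token_stream` and the running word count below are hypothetical examples chosen for illustration.

```python
def token_stream(text):
    """Hypothetical source that yields tokens one at a time, like a live feed."""
    for token in text.split():
        yield token


def running_word_count(stream):
    """Process each token as it arrives and yield an updated count right away."""
    count = 0
    for token in stream:
        count += 1
        yield token, count


updates = list(running_word_count(token_stream("real time data arrives continuously")))
print(updates)
```

Because nothing is buffered, the first result is available after the first token, which is what keeps perceived latency low.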

Data caching: This technique stores often-used data in memory to retrieve it faster.
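The sketch below uses Python's built-in `functools.lru_cache` to keep recent results in memory. `expensive_lookup` and the cache size of 128 are hypothetical; the string reversal simply stands in for a slow database read or model call.

```python
from functools import lru_cache

calls = {"count": 0}  # tracks how often the slow path actually runs


@lru_cache(maxsize=128)
def expensive_lookup(key: str) -> str:
    """Hypothetical slow operation; the result is cached per key."""
    calls["count"] += 1
    return key[::-1]


first = expensive_lookup("prompt")
second = expensive_lookup("prompt")  # served from the cache
info = expensive_lookup.cache_info()
print(first, second, calls["count"], info.hits)
```

The second call returns without running the function body. This only pays off when the same inputs repeat and the cached answer stays valid long enough to be reused.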

Optimizing data pipelines can reduce latency by 20-30% and improve real-time performance.

Generative AI applications have an impact on many industries. Here are some standout cases:

When companies in different fields tap into generative AI’s potential, they can find new ways to grow, boost their productivity, and make their customers happier.

A McKinsey & Company report predicts hybrid AI models will make up 50% of AI uses by 2025.

A Pew Research Center poll revealed that 73% of participants worry about AI’s potential misuse for harmful purposes.

A study by McKinsey & Company suggests that AI could add USD 13 trillion to the world economy by 2030.

We must address these challenges and welcome new technologies to ensure that generative AI applications are developed and deployed responsibly and helpfully.

Generative AI is a rapidly evolving field with the potential to revolutionize various industries and aspects of society. From creating realistic images and videos to powering natural language understanding and drug discovery, generative AI applications are becoming increasingly sophisticated and diverse.

While challenges exist, such as ethical considerations and computational resources, the benefits of generative AI applications are significant. We can drive innovation, improve efficiency, and address pressing societal challenges by harnessing its power.

As research and development continue to advance, we can expect to see even more groundbreaking applications of generative AI in the future. It is essential to embrace this technology responsibly and ensure its development aligns with ethical principles and societal values.

1. What are generative AI applications?

Generative AI applications use algorithms to create new content, such as images, text, or audio. They can be used for tasks like generating realistic images, writing creative content, or even composing music.

2. What are the names of the models used to create generative AI applications?

Some of the most popular models used in generative AI include:

3. What is one thing current generative AI applications cannot do?

While generative AI has made significant strides, it still struggles to understand and generate genuinely original ideas. It relies on patterns learned from existing data, so it often cannot produce truly novel or groundbreaking content.

[x]cube has been AI-native from the beginning, and we’ve been working with various versions of AI tech for over a decade. For example, we were working with BERT and GPT’s developer interface even before the public release of ChatGPT.

One of our initiatives has significantly improved the OCR scan rate for a complex extraction project. We’ve also been using Gen AI for projects ranging from object recognition to prediction improvement and chat-based interfaces.

Interested in transforming your business with generative AI? Talk to our experts over a FREE consultation today!