Microservices architecture, which offers scalability, flexibility, and faster development, has emerged as a game-changer in the constantly changing field of software development. That architectural freedom comes with a formidable challenge: testing and deploying many independent services in a complex, distributed ecosystem. Effective testing and deployment plans are therefore essential.

This blog equips you with practical strategies for testing and deploying microservices. It examines why these practices matter for the reliability and efficiency of microservices-based applications and how to put them to work.

The Need for Effective Microservices Testing and Deployment Strategies:

In modern software architecture, microservices have become increasingly prevalent because they bring flexibility, scalability, and agility to application development. Microservices, by design, comprise numerous independent services that collectively form a cohesive application.

Ensuring each microservice’s reliability, seamless functionality, and harmonious integration demands a specialized testing approach. Microservices testing is essential to validate the individual components, their synergy, and the overall system behavior. Without robust testing strategies, the risk of undetected bugs, integration issues, and performance bottlenecks increases significantly.

In essence, microservices testing and deployment strategies are imperative for maintaining the integrity and functionality of applications built on this architectural paradigm. They enable development teams to identify and rectify issues early in the development lifecycle, reducing the likelihood of errors reaching production.

What is Microservices Testing?

Microservices testing is a comprehensive and specialized approach to quality assurance tailored specifically for microservices architecture. In this modern software design paradigm, applications comprise loosely coupled, independently deployable services. This testing is crucial in ensuring the reliability, scalability, and seamless functionality of applications built on microservices.

Role of Microservices Testing in Ensuring Application Reliability

The primary role of microservices testing is to guarantee an application’s overall reliability and performance in a microservices architecture. As microservices operate independently, testing becomes instrumental in identifying potential issues such as service communication failures, data inconsistencies, and integration challenges.

By conducting thorough testing, developers and QA teams can uncover and rectify problems before they escalate, enhancing the application’s dependability.

Microservices Testing Strategies

Microservices testing is critical to ensuring the reliability and functionality of a distributed architecture. Comprehensive testing strategies are essential to optimize the testing process and ensure seamless microservices deployment. Here are the key microservices testing strategies for making a microservices-based application more robust.

A. Unit Testing for Microservices

- Testing Individual Microservices Components

  - Conducting thorough testing of each microservice in isolation.

  - Verifying that individual components perform as expected, addressing specific functionalities.

- Ensuring Isolation and Independence

  - Emphasizing the independence of unit tests to prevent dependencies between microservices.

  - Isolating the testing environment for each microservice to identify and rectify potential issues early in the development cycle.
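
To make the idea of isolation concrete, here is a minimal sketch in Python. The `OrderService` class and its inventory client are hypothetical names invented for illustration; the point is only that the remote dependency is replaced with a mock, so the test exercises one service’s logic and nothing else.

```python
from unittest.mock import Mock


class OrderService:
    """Hypothetical service logic that depends on a remote inventory client."""

    def __init__(self, inventory_client):
        self.inventory_client = inventory_client

    def place_order(self, sku, quantity):
        # In production this call would cross the network to another service.
        available = self.inventory_client.get_stock(sku)
        if quantity > available:
            return {"status": "rejected", "reason": "insufficient stock"}
        return {"status": "accepted", "sku": sku, "quantity": quantity}


def test_order_accepted_when_stock_is_sufficient():
    # The mock stands in for the inventory service, keeping the test isolated.
    inventory = Mock()
    inventory.get_stock.return_value = 10
    service = OrderService(inventory)

    result = service.place_order("sku-1", 3)

    assert result["status"] == "accepted"
    inventory.get_stock.assert_called_once_with("sku-1")


def test_order_rejected_when_stock_is_insufficient():
    inventory = Mock()
    inventory.get_stock.return_value = 1
    service = OrderService(inventory)

    assert service.place_order("sku-1", 3)["status"] == "rejected"
```

Functions named `test_*` like these can be collected by a runner such as pytest. Because nothing here touches the network, the tests stay fast and fail only when the service’s own logic is wrong.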

B. Integration Testing Across Microservices

- Verifying Interactions Between Microservices

  - Assessing the seamless integration of microservices to guarantee effective communication.

  - Confirming that data flows smoothly between interconnected microservices without disruptions.

- Addressing Dependency Challenges

  - Identifying and testing dependencies between microservices to prevent cascading failures.

  - Implementing effective strategies for handling external dependencies and ensuring consistent performance.
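
As a rough illustration, the sketch below wires two hypothetical services together in memory and checks both the happy path and a failing dependency. The class names, the `reserve` method, and the "pending" fallback are assumptions made for this example; a real integration test would typically exercise the services over their actual network interfaces.

```python
class InventoryUnavailable(Exception):
    """Raised when the (hypothetical) inventory service cannot be reached."""


class InventoryService:
    def __init__(self, stock):
        self.stock = dict(stock)
        self.healthy = True

    def reserve(self, sku, quantity):
        if not self.healthy:
            raise InventoryUnavailable("inventory service is down")
        if self.stock.get(sku, 0) < quantity:
            return False
        self.stock[sku] -= quantity
        return True


class OrderService:
    def __init__(self, inventory):
        self.inventory = inventory

    def place_order(self, sku, quantity):
        try:
            reserved = self.inventory.reserve(sku, quantity)
        except InventoryUnavailable:
            # Degrade gracefully instead of letting the failure cascade.
            return {"status": "pending", "reason": "inventory unavailable"}
        return {"status": "accepted" if reserved else "rejected"}


def test_order_reserves_stock_in_inventory():
    # Both services are real objects here, so the data flow is exercised.
    inventory = InventoryService({"sku-1": 5})
    orders = OrderService(inventory)

    assert orders.place_order("sku-1", 2) == {"status": "accepted"}
    assert inventory.stock["sku-1"] == 3


def test_order_degrades_when_inventory_is_down():
    inventory = InventoryService({"sku-1": 5})
    inventory.healthy = False
    orders = OrderService(inventory)

    result = orders.place_order("sku-1", 2)

    assert result["status"] == "pending"
    assert inventory.stock["sku-1"] == 5  # nothing was reserved
```

The second test is the one that speaks to cascading failures: it checks that an outage in one service produces a controlled response in the other rather than an unhandled error.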

C. End-to-End Testing in Microservices Architecture

- Testing the Entire Application Workflow

  - Evaluating the end-to-end functionality of the microservices architecture.

  - Verifying that the entire workflow, spanning multiple microservices, meets the application’s requirements.

- Identifying and Resolving Cross-Microservices Issues

  - Detecting and resolving issues arising from interactions between different microservices.

  - Implementing testing scenarios that simulate real-world usage to uncover and address potential cross-microservices challenges.

Continuous Integration and Microservices Testing

The agile spirit of microservices thrives on Continuous Integration and Continuous Delivery (CI/CD). Together, they bring speed, precision, and early bug detection to your distributed development workflow. Let’s dive into how CI/CD supports microservices testing, enabling fast deployments and a resilient microservices ecosystem.

A. Implementing CI for Microservices

- Frequent Code Integration: Break down monolithic development into smaller, atomic commits. Integrate code from all developers into a central repository daily, fostering collaboration and early problem detection.

- Automated Builds and Tests: Don’t let manual processes slow you down. Leverage tools to build and test each microservice automatically every time code is integrated. This rapid feedback loop lets you catch and fix bugs early, often preventing them from becoming deployment disasters.

- Modular Testing Strategies: Embrace the “divide and conquer” approach. Design unit, integration, and end-to-end tests tailored to each microservice and its unique interactions to ensure comprehensive coverage without unnecessary redundancy.

- Version Control and Rollbacks: Maintain a clear history of every code change and test result and enable swift rollbacks to stable versions if issues arise after deployment, minimizing downtime and user impact.

B. Automated Testing in CI/CD Pipelines: Your Microservices Guardian Angels

- Unit Testing: Every service is a fortress guarded by robust unit tests. These automated tests isolate and rigorously assess the core functionalities of each microservice, ensuring they operate flawlessly in isolation.

- Integration Testing: Verify how your microservices dance together. Automate tests that simulate inter-service communication and data exchange, identifying potential integration issues before they reach production.

- End-to-End Testing: Craft automated tests that mimic real-world user journeys, encompassing interactions across multiple microservices, to ensure a seamless and consistent user experience from start to finish.

- Performance Testing: Simulate peak traffic scenarios to evaluate your microservices’ performance under pressure. This proactive approach identifies potential bottlenecks and scalability issues before they impact real users.
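
For the performance testing item, a small sketch can show the shape of such a check. The helper names (`measure_latencies`, `summarize`, `fake_handler`) are made up for this example, and the handler is a stand-in that merely sleeps; in practice you would call a real service endpoint and likely use a dedicated load-testing tool.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def measure_latencies(handler, requests, concurrency):
    """Call handler() `requests` times across `concurrency` threads.

    Returns the observed latency of each call in seconds.
    """

    def timed_call(_):
        start = time.perf_counter()
        handler()
        return time.perf_counter() - start

    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        return list(pool.map(timed_call, range(requests)))


def summarize(latencies):
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    p95 = statistics.quantiles(latencies, n=100)[94]
    return {
        "count": len(latencies),
        "mean": statistics.mean(latencies),
        "p95": p95,
    }


def fake_handler():
    # Stand-in for a request to a microservice (assumption for this sketch).
    time.sleep(0.001)


if __name__ == "__main__":
    report = summarize(measure_latencies(fake_handler, requests=50, concurrency=5))
    print(report)
```

Tracking a high percentile such as p95 alongside the mean is a common choice, because averages can hide the slow requests that users actually notice.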

C. Rapid Feedback and Efficient Bug Detection

- Real-time Feedback Loops: Integrate test results and build status into your CI/CD pipeline dashboard to provide immediate visibility into potential issues, allowing for swift intervention and course correction.

- Automated Bug Reporting: Use tools that automatically identify and report bugs as they are discovered during testing. This accelerates problem resolution and prevents bugs from slipping through the cracks.

- Failure-Focused Workflows: Design your CI/CD pipeline to halt upon test failures. This prevents potentially buggy code from progressing further, minimizes deployment risks, and ensures only quality code reaches production.
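
The failure-focused idea can be sketched in a few lines. This is not how any particular CI system is implemented; `run_pipeline` and the stage names are hypothetical, and the sketch only shows stages running in order with later stages skipped after the first failure.

```python
def run_pipeline(stages):
    """Run (name, callable) stages in order and halt at the first failure.

    A stage fails if it returns a falsy value or raises an exception.
    Returns a list of (name, passed) for the stages that actually ran.
    """
    results = []
    for name, stage in stages:
        try:
            passed = bool(stage())
        except Exception:
            passed = False
        results.append((name, passed))
        if not passed:
            break  # later stages, such as deployment, never run
    return results


def pipeline_passed(stages, results):
    # The pipeline passes only if every stage ran and every stage succeeded.
    return len(results) == len(stages) and all(ok for _, ok in results)


if __name__ == "__main__":
    deployed = []
    stages = [
        ("build", lambda: True),
        ("unit-tests", lambda: False),  # simulate a failing test stage
        ("deploy", lambda: deployed.append("v2") or True),
    ]
    results = run_pipeline(stages)
    print(results)                           # deploy is absent from the results
    print(pipeline_passed(stages, results))  # False
    print(deployed)                          # [] because deploy never ran
```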

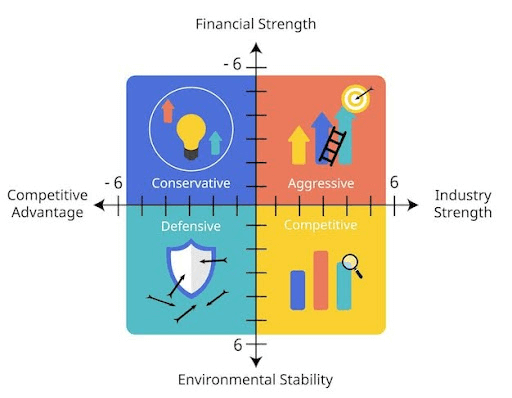

Deployment Strategies for Microservices

Microservices deployment is a vital component of contemporary software development, changing how applications are developed, launched, and scaled. Rooted in the principles of microservices architecture, it divides a large, complex application into smaller, independently deployable services.

In essence, microservices deployment involves distributing and coordinating these discrete services to form a cohesive, functioning application. Unlike monolithic architectures, where the entire application is a single, interconnected unit, microservices deployment allows each service to operate independently, enabling developers to make changes, updates, and enhancements to specific services without disrupting the entire application.

The optimization of microservices for deployment is grounded in the pursuit of agility, scalability, and fault isolation. By breaking down a large application into smaller, manageable services, microservices deployment facilitates rapid development cycles, allowing teams to iterate on individual services without impeding the progress of the entire application.

A. Introduction to Microservices Deployment:

Microservices necessitate granular deployments catered to individual services, in contrast to monolithic deployments, which roll everything out as one package. This creates new obstacles, but it also opens doors for scalability, agility, and autonomous updates.

B. Critical Aspects of Microservices Deployment:

- Independence and Isolation: Microservices are independently deployable, meaning updates or changes to one service don’t impact others. This fosters fault isolation, enabling seamless updates without causing disruptions across the entire application.

- Containerization and Orchestration: Microservices are often containerized using tools like Docker, providing a lightweight and consistent runtime environment. Container orchestration tools, such as Kubernetes, manage these containers’ deployment, scaling, and operation, ensuring efficient resource utilization.

- Continuous Integration and Continuous Deployment (CI/CD): Microservices deployment thrives on the principles of CI/CD, automating the integration and deployment processes. This allows for swift and frequent releases, so changes can be rolled out to production with minimal friction.

- Scalability: Microservices empower scalable deployments, allowing individual services to scale independently based on demand. This flexibility in scaling ensures optimal resource utilization and responsiveness.
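
To illustrate scaling a single service on demand, the sketch below follows the core formula documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The function name and the default bounds are assumptions for this example, and the real autoscaler applies further rules (such as a tolerance band and stabilization) that are left out here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Estimate how many replicas one service needs for the observed load.

    current_metric and target_metric must use the same unit, for example
    average CPU utilization in percent. The bounds are illustrative defaults.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds so scaling stays within limits.
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # 4 replicas at 90% CPU with a 60% target: scale out to 6.
    print(desired_replicas(4, 90, 60))
    # 2 replicas at 30% CPU with a 60% target: scale in to 1.
    print(desired_replicas(2, 30, 60))
```

Because the calculation is per service, a busy checkout service can scale out while a quiet reporting service stays at its minimum.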

C. Containerization and Microservices:

Each container packages a microservice with its dependencies, creating a standardized, self-sufficient unit ready for deployment across environments. Containerization streamlines deployments, facilitates scaling, and makes your microservices ecosystem portable.

D. Blue-Green Deployment for Microservices:

Blue-green deployment involves running your current production version (blue) alongside a new version (green) in a separate, production-like environment. Once the green version is thoroughly tested and validated, you switch traffic to it, minimizing downtime. Because the blue environment stays in place, rolling back is a matter of switching traffic back. This strategy is particularly well suited to critical services where zero downtime is essential.
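
A minimal sketch of the switch might look like the following. The `BlueGreenRouter` class is hypothetical, and the two environments are modeled as plain callables; in a real setup the switch would usually happen at a load balancer, DNS record, or service mesh.

```python
class BlueGreenRouter:
    """Route all traffic to one of two environments (illustrative only)."""

    def __init__(self, blue, green):
        # Each environment is modeled as a callable that handles a request.
        self.environments = {"blue": blue, "green": green}
        self.live = "blue"

    def idle(self):
        return "green" if self.live == "blue" else "blue"

    def handle(self, request):
        return self.environments[self.live](request)

    def switch(self, health_check):
        """Send traffic to the idle environment if it passes the health check."""
        candidate = self.idle()
        if not health_check(self.environments[candidate]):
            return False  # keep serving from the current environment
        self.live = candidate
        return True

    def rollback(self):
        # The previous environment is still running, so rollback is a switch.
        self.live = self.idle()


if __name__ == "__main__":
    router = BlueGreenRouter(
        blue=lambda request: "v1 handled " + request,
        green=lambda request: "v2 handled " + request,
    )
    print(router.handle("order"))  # served by blue
    router.switch(lambda env: env("ping").startswith("v2"))
    print(router.handle("order"))  # served by green
    router.rollback()
    print(router.handle("order"))  # served by blue again
```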

E. Canary Deployment Strategy:

Canary deployments introduce a new microservice version to a select subset of users before widespread rollout. This risk-averse approach fits well with microservices architecture, where each service can be released independently, and it allows controlled experimentation while limiting the impact of potential issues.

By gradually rolling out updates and closely monitoring performance metrics and user feedback, organizations can identify and address issues on a small scale before they disrupt the entire user base.

This process helps ensure that new microservice versions reach the full user base only after they have proven stable, balancing controlled experimentation with a seamless user experience.
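
The two halves of a canary rollout, picking the subset of users and deciding whether to continue, can be sketched as follows. The function names, the 100-request minimum, and the one-percentage-point tolerance are all illustrative assumptions, not recommended values.

```python
import hashlib


def routes_to_canary(user_id, canary_percent):
    """Deterministically assign a user to the canary based on a hash bucket.

    Hashing the user ID keeps each user on the same version across requests.
    """
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # bucket in the range 0..99
    return bucket < canary_percent


def canary_decision(baseline_errors, baseline_requests,
                    canary_errors, canary_requests,
                    tolerance=0.01, min_requests=100):
    """Return "wait", "promote", or "rollback" by comparing error rates."""
    if canary_requests < min_requests:
        return "wait"  # not enough traffic to judge the canary yet
    baseline_rate = baseline_errors / max(baseline_requests, 1)
    canary_rate = canary_errors / canary_requests
    if canary_rate > baseline_rate + tolerance:
        return "rollback"
    return "promote"


if __name__ == "__main__":
    users = ["user-%d" % i for i in range(1000)]
    share = sum(routes_to_canary(u, 10) for u in users) / len(users)
    print("share of users on canary:", share)
    print(canary_decision(40, 10000, 5, 1000))
    print(canary_decision(40, 10000, 50, 1000))
```

A gradual rollout then amounts to raising `canary_percent` in steps and re-running the decision at each step.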

When choosing a deployment strategy, consider factors like service criticality, risk tolerance, and desired rollout speed. And remember the power of automation! Utilize tools to orchestrate deployments, roll back faulty versions, and ensure a smooth, continuous flow of updates to your microservices landscape.

Best Practices for Microservices Testing and Deployment: A Winning Formula for Agility and Stability

The microservices revolution promises agility and resilience, but navigating the distributed complexities demands a strategic approach. Let’s explore best practices for microservices testing and deployment that ensure your independent services sing in perfect harmony, delivering a seamless user experience.

A. Adopting DevOps Practices:

Bridging the gap between development and operations is crucial. Embrace DevOps principles to foster communication, collaboration, and automation. Here’s how:

- Shared responsibility: Break down silos and encourage developers to own the entire lifecycle of their microservices, from testing to deployment.

- Infrastructure as code: Manage infrastructure through code for consistent environments and automated deployments.

- Continuous Integration and Continuous Delivery (CI/CD): Automate code integration, building, testing, and deployment to expedite updates and minimize risks.

B. Implementing Monitoring and Logging Solutions:

In a vast, distributed world, visibility is critical. Implement robust monitoring and logging solutions to keep a watchful eye on your microservices ecosystem:

- Real-time metrics: Track key performance indicators (KPIs) for each service, such as resource utilization, response times, and error rates.

- Distributed tracing: Understand how requests flow across services and identify performance bottlenecks or latency issues.

- Centralized logging: Aggregate logs from all services into a single platform for easy analysis and troubleshooting.

- Alerting and notifications: Set up automated alerts for critical metrics to proactively identify and address potential problems before they impact users.
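
One building block shared by centralized logging and distributed tracing is a structured log line that carries a correlation ID. The sketch below uses Python’s standard `logging` module; the `service` and `trace_id` field names and the helper functions are assumptions for this example rather than any particular platform’s schema.

```python
import json
import logging
import uuid


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record):
        payload = {
            "level": record.levelname,
            # These two fields arrive via the `extra` argument of log calls.
            "service": getattr(record, "service", "unknown"),
            "trace_id": getattr(record, "trace_id", None),
            "message": record.getMessage(),
        }
        return json.dumps(payload)


def build_logger(name, stream=None):
    """Create a logger that writes JSON lines (stderr when stream is None)."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]
    return logger


def new_trace_id():
    # A random ID to pass along with a request as it crosses services.
    return uuid.uuid4().hex


if __name__ == "__main__":
    trace_id = new_trace_id()
    orders = build_logger("orders")
    payments = build_logger("payments")
    # The same trace_id ties together log lines from different services.
    orders.info("order received", extra={"service": "orders", "trace_id": trace_id})
    payments.info("payment charged", extra={"service": "payments", "trace_id": trace_id})
```

When every service logs the same `trace_id` for a given request, a log aggregation platform can reassemble that request’s path by filtering on the ID.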

C. Establishing a Robust Rollback Plan:

Even the best-laid plans can encounter problems. Have a clear and tested rollback plan ready to mitigate risks and minimize downtime:

- Version control: Maintain detailed records of every deployment, including code changes and configuration updates.

- Rollback procedures: Define clear steps to safely revert to a previous version of any service in case of issues.

- Testing rollbacks: Regularly test your rollback procedures to ensure they function smoothly and efficiently.

- Communication plan: Transparently communicate rollback plans and potential downtime to stakeholders and users.
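
The version-record and rollback-procedure items can be pictured with a tiny sketch. `DeploymentHistory` is a hypothetical helper that only tracks version labels; an actual rollback would also redeploy artifacts and handle configuration and data changes.

```python
class DeploymentHistory:
    """Track deployed versions of one service so a rollback target is known."""

    def __init__(self):
        self._versions = []

    def deploy(self, version):
        self._versions.append(version)
        return version

    @property
    def current(self):
        return self._versions[-1] if self._versions else None

    def rollback(self):
        """Drop the latest version and return (failed, restored)."""
        if len(self._versions) < 2:
            raise RuntimeError("no earlier version to roll back to")
        failed = self._versions.pop()
        return failed, self._versions[-1]


if __name__ == "__main__":
    history = DeploymentHistory()
    history.deploy("1.4.0")
    history.deploy("1.5.0")
    failed, restored = history.rollback()
    print("rolled back", failed, "to", restored)
    print("current version:", history.current)
```

Exercising a helper like this in a test environment is one lightweight way to rehearse the rollback path before it is needed.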

Continuously experiment, gather feedback, and refine your approach as your microservices ecosystem evolves. By embracing DevOps practices, implementing robust monitoring and logging, and establishing a solid rollback plan, you’ll confidently navigate the complexities of microservices testing and deployment.

Summary

Adopting effective microservices testing and deployment strategies helps ensure the seamless integration and functionality of modern software architectures. As a dynamic and distributed approach to application development, microservices demand meticulous attention to testing to guarantee their reliability and performance.

By embracing comprehensive microservices testing, organizations can identify and address potential issues early in the development lifecycle, enhancing their applications’ overall stability and robustness.

On the other hand, microservices deployment requires a strategic approach to manage the complexity of multiple independently deployable services. Employing well-defined deployment strategies ensures a smooth and efficient release process, minimizing downtime and disruptions.

Leveraging continuous integration and continuous deployment (CI/CD) pipelines to automate and streamline the deployment of microservices is essential for enabling rapid and reliable releases.

In essence, the success of microservices lies in the meticulous execution of testing and deployment strategies. Organizations prioritizing these aspects are better positioned to harness the benefits of agility, scalability, and resilience that microservices architecture promises.

As the software development landscape evolves, adopting and refining microservices testing and deployment strategies will remain fundamental to delivering high-quality, reliable, and responsive applications in the dynamic digital era.

How can [x]cube LABS Help?

[x]cube LABS’s teams of product owners and experts have worked with global brands such as Panini, Mann+Hummel, tradeMONSTER, and others to deliver over 950 successful digital products, resulting in the creation of new digital revenue lines and entirely new businesses. With over 30 global product design and development awards, [x]cube LABS has established itself among global enterprises’ top digital transformation partners.

Why work with [x]cube LABS?

- Founder-led engineering teams:

Our co-founders and tech architects are deeply involved in projects and are unafraid to get their hands dirty.

- Deep technical leadership:

Our tech leaders have spent decades solving hard technical problems. Having them on your project is like instantly plugging into thousands of person-hours of real-life experience.

- Stringent induction and training:

We are obsessed with crafting top-quality products. We hire only the best hands-on talent. We train them like Navy SEALs to meet our own standards of software craftsmanship.

- Next-gen processes and tools:

Eye on the puck. We constantly research and stay up-to-speed with the best technology has to offer.

- DevOps excellence:

Our CI/CD tools enforce strict quality checks to ensure the code in your project is top-notch.

Contact us to discuss your digital innovation plans, and our experts would be happy to schedule a free consultation!

1-800-805-5783
