1-800-805-5783

When it comes to digital lending in 2026, speed is no longer just a competitive advantage; it is the baseline. But this velocity has also created a high-speed lane for loan fraud.

As instant credit approvals become the global standard, the window for verifying a borrower’s legitimacy has shrunk from days to mere milliseconds.

This acceleration has triggered an equally sophisticated evolution in criminal tactics.

Traditional detection systems, once heralded for their predictive power, are now being outpaced by “industrialized” schemes where fraudsters use generative AI to create perfect synthetic identities and deepfake documentation at scale.

To counter this, a fundamental shift is occurring in financial security: the transition from static machine learning models to autonomous AI agents.

While a traditional model provides a risk score, an AI agent possesses “agency”: the ability to perceive data, reason through complex scenarios for comprehensive risk modeling, and take immediate action to stop loan fraud before it enters the system.

By 2026, the primary threat to lenders has shifted from individual bad actors to highly automated “Fraud-as-a-Service” (FaaS) syndicates.

These organizations utilize adversarial AI to probe lending APIs for weaknesses, finding the exact threshold where a “soft” check turns into a “hard” rejection.

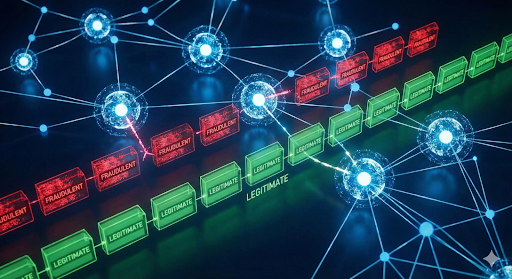

In this environment, loan fraud is no longer just a series of isolated incidents; it is a high-frequency, multi-dimensional attack.

Fraudsters now deploy “Digital Frankensteins”: synthetic identities that blend real, stolen Social Security numbers with AI-generated faces, voices, and even five-year-old social media histories.

For a legacy system, these personas appear as perfect “thin-file” customers. Detecting them requires a system that doesn’t just look for anomalies in a single application but reasons across the entire digital ecosystem in real time.

The core difference between a 2025-era model and a 2026-era AI agent lies in autonomy.

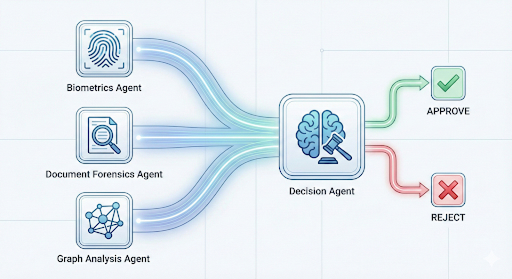

A model is a calculator; an agent is a digital investigator. When an application is submitted, an AI agent doesn’t just calculate a probability of loan fraud. Instead, it initiates a series of parallel “squad” actions.

These agents can autonomously decide to query external databases, trigger a liveness check, or cross-reference a borrower’s behavioral biometrics against thousands of known-good patterns. They operate within a “latency discipline,” where the entire investigative loop from ingestion to final decision is completed in under 100 milliseconds. This real-time capability is what allows lenders to offer “instant” products without being crippled by the skyrocketing costs of loan fraud.
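The parallel checks and the latency budget described above can be sketched with `asyncio`. This is a minimal illustration, not a production design: the three check functions, their names, their sleep times, and the risk values they return are all hypothetical stand-ins for real service calls. Only the 100 ms budget comes from the text.

```python
import asyncio

LATENCY_BUDGET_S = 0.100  # the "under 100 milliseconds" figure from the text

# Hypothetical checks: each sleep stands in for a network or model call.
async def query_external_db(app):
    await asyncio.sleep(0.010)
    return ("external_db", 0.1)

async def liveness_check(app):
    await asyncio.sleep(0.020)
    return ("liveness", 0.2)

async def behavioral_match(app):
    await asyncio.sleep(0.005)
    return ("behavior", 0.7)

async def investigate(app, checks, budget_s=LATENCY_BUDGET_S):
    """Run all checks concurrently and keep only those that finish in budget."""
    tasks = [asyncio.create_task(check(app)) for check in checks]
    done, pending = await asyncio.wait(tasks, timeout=budget_s)
    for task in pending:
        task.cancel()  # a late answer is treated as no answer
    await asyncio.gather(*pending, return_exceptions=True)
    results = dict(task.result() for task in done)
    return results, len(pending)

if __name__ == "__main__":
    checks = [query_external_db, liveness_check, behavioral_match]
    print(asyncio.run(investigate({"id": "A1"}, checks)))
```

Because the checks run concurrently, the loop takes roughly as long as the slowest check rather than the sum of all of them. How a real system should treat a check that misses the budget (ignore it, escalate, or fail closed) is a policy decision this sketch does not settle.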

Modern fraud prevention is now structured as an ecosystem of specialized agents, each focused on a specific nuance of the application process. This “squad” approach ensures that no single point of failure exists.

The first line of defense is an agent specialized in visual and linguistic forensics. In 2026, simple OCR is insufficient. This agent analyzes the “digital fingerprints” of uploaded documents, looking for pixel-level inconsistencies, GAN-generated textures in ID photos, or metadata that suggests a document was generated by a machine rather than scanned by a human. By identifying these microscopic signatures, the agent flags loan fraud that would be invisible to the human eye.
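The metadata part of this check can be illustrated with a simple heuristic. The sketch below assumes the document's metadata has already been extracted into a dictionary keyed by standard EXIF tag names (`Make`, `Model`, `Software`, `DateTimeOriginal`). The marker strings and the flag wording are hypothetical, and pixel-level or GAN-texture analysis is out of scope here.

```python
def metadata_flags(meta, suspicious_software=("generator", "editor")):
    """Return human-readable reasons why the metadata looks machine-made.

    `suspicious_software` holds hypothetical substrings; a real deployment
    would maintain its own list.
    """
    flags = []
    if not meta.get("Make") and not meta.get("Model"):
        flags.append("no capture device recorded")
    software = (meta.get("Software") or "").lower()
    if any(marker in software for marker in suspicious_software):
        flags.append(f"produced by software: {meta['Software']}")
    if not meta.get("DateTimeOriginal"):
        flags.append("no original capture timestamp")
    return flags

scanned = {"Make": "Acme", "Model": "Scan-1",
           "DateTimeOriginal": "2025:01:01 10:00:00"}
generated = {"Software": "Hypothetical Image Generator 2.0"}
print(metadata_flags(scanned))    # no flags expected for the scanned example
print(metadata_flags(generated))
```

Metadata is easy to strip or forge, so flags like these are better treated as one weak signal among several than as a verdict.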

Identity is no longer about what you know (passwords) or what you have (SMS codes), but how you behave. This agent monitors the applicant’s interaction with the digital form. It measures typing cadence, mouse jitter, and the fluidity of navigation. A fraudster copy-pasting stolen information or a bot script interacting with the UI displays a “non-human” profile. When these signals deviate from the norm, the agent identifies a high-risk instance of loan fraud and triggers an immediate step-up authentication.
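Two of the signals mentioned above, pasted input and machine-regular typing, can be approximated from keystroke timestamps alone. The following is a rough sketch under stated assumptions: the function names, the thresholds (`min_cv`, `min_keys_per_char`), and the input format are illustrative and not taken from any real biometrics product.

```python
from statistics import mean, pstdev

def cadence_features(key_times_ms):
    """Summarize the gaps between consecutive keystrokes."""
    gaps = [b - a for a, b in zip(key_times_ms, key_times_ms[1:])]
    if not gaps:
        return None
    avg = mean(gaps)
    # Coefficient of variation: near 0 means metronome-like timing.
    cv = pstdev(gaps) / avg if avg > 0 else 0.0
    return {"mean_gap_ms": avg, "cv": cv}

def looks_non_human(key_times_ms, chars_entered,
                    min_cv=0.15, min_keys_per_char=0.5):
    """Flag paste-like input or suspiciously regular typing (assumed thresholds)."""
    # Many characters from few keystrokes suggests copy-paste or autofill.
    if chars_entered > 0 and len(key_times_ms) / chars_entered < min_keys_per_char:
        return True
    feats = cadence_features(key_times_ms)
    if feats is None:
        return False  # not enough data to judge
    return feats["cv"] < min_cv

human = [0, 120, 310, 390, 640, 700]   # irregular gaps
bot = [0, 50, 100, 150, 200]           # perfectly even gaps
print(looks_non_human(human, chars_entered=6))
print(looks_non_human(bot, chars_entered=5))
print(looks_non_human([0, 90], chars_entered=40))  # paste-like
```

A positive result here would feed the step-up authentication described above instead of rejecting the applicant outright, since password managers and accessibility tools can produce similar patterns.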

Fraudsters rarely attack once. They operate in clusters, using shared devices, Wi-Fi networks, or slightly modified addresses. The Graph Agent uses Graph Neural Networks (GNNs) to model connections between thousands of disparate applications. If a new application shares a “digital proximity” to a cluster of previously charged-off loans, the agent recognizes the pattern of an organized loan fraud ring, even if the individual application data points appear legitimate.
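The idea of “digital proximity” can be shown without a neural network. The sketch below swaps in a much simpler technique, connected components via union-find, in place of a GNN: applications that share any attribute end up in the same cluster, and a new application is scored by how many of its cluster neighbors were charged off. The attribute strings, the function names, and the scoring rule are assumptions made for illustration, and addresses are assumed to be normalized already.

```python
from collections import defaultdict

def find(parent, x):
    """Union-find root lookup with path halving."""
    while parent[x] != x:
        parent[x] = parent[parent[x]]
        x = parent[x]
    return x

def cluster_applications(apps):
    """apps maps app_id -> set of attribute strings (device, Wi-Fi, address)."""
    parent = {app_id: app_id for app_id in apps}
    first_seen = {}  # attribute -> first application that used it
    for app_id, attrs in apps.items():
        for attr in attrs:
            if attr in first_seen:
                root_a = find(parent, app_id)
                root_b = find(parent, first_seen[attr])
                parent[root_a] = root_b
            else:
                first_seen[attr] = app_id
    clusters = defaultdict(set)
    for app_id in apps:
        clusters[find(parent, app_id)].add(app_id)
    return list(clusters.values())

def ring_risk(app_id, apps, charged_off):
    """Share of an application's cluster neighbors that were charged off."""
    for cluster in cluster_applications(apps):
        if app_id in cluster:
            others = cluster - {app_id}
            return len(others & charged_off) / len(others) if others else 0.0
    return 0.0

apps = {
    "A": {"dev:1", "wifi:x"},
    "B": {"dev:1"},
    "C": {"wifi:x", "addr:9"},
    "D": {"dev:7"},
    "NEW": {"addr:9"},
}
print(ring_risk("NEW", apps, charged_off={"A", "B"}))
```

Note that `NEW` shares nothing directly with `A` or `B`; it is linked to them only through `C`. That indirect link is the kind of connection a per-application check would miss.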

The “brain” of the system, the Orchestration Agent, synthesizes insights from all other agents. It weighs the conflicting signals. Perhaps the document looks valid, but the behavioral biometrics are suspicious. It then makes a real-time decision: approve, reject, or escalate. By managing these trade-offs autonomously, it maintains the balance between high-speed approvals and robust protection against loan fraud.
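A toy version of this synthesis step is a weighted average with a conflict check. Everything in the sketch below is an assumption: the `Signal` type, the weights, and the three thresholds are hypothetical, and a real orchestrator would likely use a learned policy in place of fixed cut-offs.

```python
from dataclasses import dataclass

@dataclass
class Signal:
    agent: str
    risk: float        # 0.0 (clean) to 1.0 (almost certainly fraud)
    weight: float = 1.0

def decide(signals, approve_below=0.3, reject_above=0.7, conflict_gap=0.5):
    """Return (decision, score) where decision is approve, reject, or escalate."""
    if not signals:
        raise ValueError("at least one signal is required")
    total_weight = sum(s.weight for s in signals)
    score = sum(s.risk * s.weight for s in signals) / total_weight
    if score >= reject_above:
        return "reject", score
    risks = [s.risk for s in signals]
    # Agents that strongly disagree are sent to review, not averaged away.
    if max(risks) - min(risks) >= conflict_gap:
        return "escalate", score
    if score < approve_below:
        return "approve", score
    return "escalate", score

# The conflicting case from the text: valid-looking document, suspicious behavior.
print(decide([Signal("document", 0.1), Signal("behavior", 0.8), Signal("graph", 0.2)]))
```

The conflict check exists because a plain average would let one clean-looking signal dilute a strongly suspicious one.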

Synthetic identity fraud is perhaps the most difficult challenge of 2026. Because these identities use real components (like a valid SSN from a child or a deceased individual), they often bypass standard credit bureau checks.

AI agents combat this by using “link analysis” and external verification loops. For example, an agent might autonomously verify whether a phone number has been historically associated with the applicant’s name across multiple service providers over several years. A synthetic identity, created only months ago, will lack this “digital longevity.” By piecing together a person’s history across the web, AI agents can filter out the noise of a fake persona and accurately pinpoint loan fraud.
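The phone-number example can be made concrete with a small longevity check. The record layout, the function names, and the thresholds of two years and two providers are hypothetical choices for this sketch. The sample data is made up.

```python
from datetime import date

def digital_longevity(observations, name, phone, today):
    """observations: iterable of (provider, name, phone, first_seen_date).

    Returns (years since the earliest matching record, distinct providers).
    """
    matches = [o for o in observations if o[1] == name and o[2] == phone]
    if not matches:
        return 0.0, 0
    earliest = min(o[3] for o in matches)
    providers = {o[0] for o in matches}
    return (today - earliest).days / 365.25, len(providers)

def lacks_longevity(observations, name, phone, today,
                    min_years=2.0, min_providers=2):
    """True when the name/phone pairing looks too new or too thinly attested."""
    years, providers = digital_longevity(observations, name, phone, today)
    return years < min_years or providers < min_providers

records = [
    ("carrier_a", "Ana Diaz", "555-0100", date(2019, 1, 1)),
    ("utility_b", "Ana Diaz", "555-0100", date(2021, 6, 1)),
    ("carrier_a", "Sam Roe", "555-0199", date(2025, 9, 1)),
]
today = date(2026, 1, 1)
print(lacks_longevity(records, "Ana Diaz", "555-0100", today))
print(lacks_longevity(records, "Sam Roe", "555-0199", today))
```

A short history is not proof of fraud, since young adults and recent immigrants are legitimately thin-file, so a result like this would normally raise scrutiny without deciding the outcome.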

As AI agents take over more decision-making power, regulatory scrutiny has increased. In 2026, “the AI said so” is not an acceptable legal defense. Lenders must be able to explain exactly why an application was flagged as loan fraud.

This has led to the rise of Explainable AI (XAI) as a core pillar of agentic design. When an agent blocks a transaction, it simultaneously generates a natural language justification. For instance: “Application flagged due to high-velocity device reuse across three different identities and a 92% match with a known document-tampering template.” This level of transparency ensures that while the process is automated, it remains under the strict governance of risk officers and regulators.
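One simple way to produce a justification like the one quoted above is to have each agent attach a plain-language reason to its finding and assemble the sentence from the findings that mattered. This is a template-based sketch, not an XAI framework: the `Finding` type, the severity scale, and the reporting threshold are assumptions.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Finding:
    agent: str
    reason: str       # plain-language phrase supplied by the agent
    severity: float   # 0.0 to 1.0, hypothetical scale

def justify(findings, min_severity=0.5):
    """Build one sentence citing every finding at or above the threshold."""
    cited = sorted(
        (f for f in findings if f.severity >= min_severity),
        key=lambda f: f.severity,
        reverse=True,
    )
    if not cited:
        return "Application not flagged: no finding met the reporting threshold."
    reasons = [f.reason for f in cited]
    if len(reasons) == 1:
        joined = reasons[0]
    else:
        joined = ", ".join(reasons[:-1]) + " and " + reasons[-1]
    return f"Application flagged due to {joined}."

findings = [
    Finding("graph", "high-velocity device reuse across three different identities", 0.9),
    Finding("document", "a 92% match with a known document-tampering template", 0.8),
    Finding("behavior", "slightly fast typing", 0.2),
]
print(justify(findings))
```

Keeping the structured findings alongside the sentence gives risk officers an auditable record of which agent contributed which reason.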

Furthermore, these agents are governed by “Reward Models” that prevent them from becoming overly aggressive. If an agent blocks too many legitimate customers (false positives), the reinforcement learning loop adjusts its thresholds. This ensures that the fight against loan fraud doesn’t inadvertently destroy the customer experience.
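The feedback idea can be illustrated with a far simpler mechanism than reinforcement learning: a rule that nudges the blocking threshold after each batch of reviewed cases. The sketch below is a stand-in for illustration, not a reward model. The target false-positive rate, step size, and bounds are all assumed values.

```python
def adjust_threshold(threshold, outcomes, target_fp_rate=0.02,
                     step=0.01, floor=0.50, ceiling=0.95):
    """Nudge the risk score above which applications are blocked.

    outcomes: list of (blocked, was_fraud) pairs from reviewed cases.
    """
    legit_blocked = [blocked for blocked, was_fraud in outcomes if not was_fraud]
    fraud_missed = [not blocked for blocked, was_fraud in outcomes if was_fraud]
    fp_rate = sum(legit_blocked) / len(legit_blocked) if legit_blocked else 0.0
    if fp_rate > target_fp_rate:
        threshold += step   # too many good customers blocked: be less aggressive
    elif any(fraud_missed):
        threshold -= step   # fraud slipped through: be more aggressive
    # Clamp so feedback can never disable or over-tighten the control.
    return round(min(ceiling, max(floor, threshold)), 4)

# 2 of 10 legitimate applicants were blocked, so the threshold is raised.
batch = [(True, False)] * 2 + [(False, False)] * 8
print(adjust_threshold(0.70, batch))
```

The clamp matters as much as the adjustment: without bounds, a run of complaints could loosen the threshold until the control stops working.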

The battle doesn’t end at the point of approval. In 2026 and beyond, AI agents operate throughout the entire loan lifecycle. A borrower who was legitimate at the time of application may later have their account “taken over” by a criminal.

Post-disbursement agents continuously monitor account behavior for “early warning indicators.” Sudden shifts in spending patterns, changes in login locations, or unusual contact information updates trigger the agents to re-verify the identity. This continuous, real-time vigilance is the final piece of the puzzle, ensuring that loan fraud is caught even if the initial application was successful.
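The three early warning indicators named above can be expressed as simple rules over an account baseline. The field names, the spending multiplier, and the rule that two signals trigger re-verification are assumptions for this sketch. A real monitor would learn the baseline per customer.

```python
def early_warnings(baseline, event, spend_multiplier=3.0):
    """Compare one account event against the borrower's baseline."""
    warnings = []
    amount = event.get("amount")
    if amount is not None and amount > spend_multiplier * baseline["avg_spend"]:
        warnings.append("spending spike")
    country = event.get("login_country")
    if country is not None and country not in baseline["login_countries"]:
        warnings.append("new login location")
    if event.get("contact_changed"):
        warnings.append("contact details updated")
    return warnings

def needs_reverification(warnings, min_signals=2):
    """Require several indicators at once to limit false alarms (assumed policy)."""
    return len(warnings) >= min_signals

baseline = {"avg_spend": 120.0, "login_countries": {"US"}}
takeover = {"amount": 900.0, "login_country": "RO", "contact_changed": True}
routine = {"amount": 95.0, "login_country": "US"}
print(early_warnings(baseline, takeover), needs_reverification(early_warnings(baseline, takeover)))
print(early_warnings(baseline, routine), needs_reverification(early_warnings(baseline, routine)))
```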

The lending industry has reached a point where human intervention alone cannot scale to meet the speed and sophistication of modern criminals. AI agents represent the next generation of defense: a proactive, autonomous, and incredibly fast layer of intelligence that secures the digital economy.

By integrating multi-agent frameworks that handle everything from behavioral biometrics to complex graph analysis, financial institutions can finally close the gaps that fraudsters have exploited for years. In the face of industrialized loan fraud, the only way to protect the future of lending is to empower the silent sentinels that never sleep.

Traditional software relies on static “if-then” rules and historical data to flag suspicious activity. AI agents, however, are autonomous; they can reason through new, never-before-seen tactics, collaborate with other agents, and take real-time actions like triggering a video liveness check to stop loan fraud instantly.

Yes, AI agents can detect synthetic identities. They use “digital longevity” checks and link analysis to see if an identity has a consistent history across multiple platforms and years. Synthetic identities usually lack this deep digital footprint, allowing agents to identify loan fraud even when the Social Security number and name are “technically” valid.

AI agents do not block more legitimate customers; the opposite is true. Because AI agents analyze thousands of data points, including behavioral biometrics and network patterns, they are much more precise than traditional systems. This results in fewer legitimate customers being blocked, significantly improving the user experience while still preventing loan fraud.

Yes, the decisions of AI agents can be explained. Modern AI agents are built with Explainable AI (XAI) frameworks. This means they provide a clear, auditable trail and a natural language explanation for every decision. This transparency is essential for meeting the strict regulatory requirements surrounding loan fraud prevention and fair lending.

In 2026, top-tier AI agent systems operate with a “latency discipline” of under 100 milliseconds. This ensures that the deep-dive investigation into potential loan fraud occurs in the background without the customer ever experiencing a delay in their application process.

At [x]cube LABS, we craft intelligent AI agents that seamlessly integrate with your systems, enhancing efficiency and innovation.

Integrate our Agentic AI solutions to automate tasks, derive actionable insights, and deliver superior customer experiences effortlessly within your existing workflows.

For more information and to schedule a FREE demo, check out all our ready-to-deploy agents here.